How To Detect And Learn From What The Big Companies Are A/B Testing

We can learn a lot about what leaders are experimenting with simply by observing what's publicly visible to us. This idea was partly inspired by Robert Greene's Mastery, which reinforces the importance of passive observation as the first phase of an apprenticeship, before moving on to practice. Taking this seriously, since the start of this year I have been detecting and publishing a/b tests from the biggest companies I could think of. Recently, people have also started asking me how I discover these experiments, and some have shown interest in sharing their own discoveries. Hence I wrote this guide to inspire more leaked a/b test discoveries. I also hope it will encourage people to share their leaked a/b tests with us so we can publish them and learn faster together. :)

Step 1: Choosing A Company And URL To Observe

What we want to do is detect an active controlled experiment, that is, find evidence that a selected company is randomizing us (the visitors) into variations with some interesting UI change. To do so, we observe 1) companies that run many experiments and 2) companies with enough traffic to have immense statistical power for detecting the effects of tiny UI changes. The companies we currently watch include (but need not be limited to): Airbnb, Amazon, Booking, Bol, Etsy, Facebook, Google, Lyft, Netflix, Uber, and Zalando.
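Why does traffic matter so much? A common normal-approximation formula for the number of visitors each variation needs, when comparing two conversion rates, makes it concrete. This is an illustrative sketch we're adding, not anything the companies above have published; the 5% baseline rate and the lift values are hypothetical inputs, and the formula simplifies by assuming both variations share the baseline variance p(1 - p).

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, absolute_lift: float,
                           alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variation for a two-sided test.

    Uses n = 2 * (z_{1-alpha/2} + z_{power})^2 * p * (1 - p) / lift^2,
    a simplification that assumes both variations share the baseline variance.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for power = 0.8
    variance = baseline_rate * (1 - baseline_rate)
    n = 2 * (z_alpha + z_power) ** 2 * variance / absolute_lift ** 2
    return ceil(n)


# Hypothetical example: a 5% baseline conversion rate and two detectable lifts.
for lift in (0.005, 0.001):
    needed = visitors_per_variation(0.05, lift)
    print(f"lift {lift:.3f}: about {needed:,} visitors per variation")
```

Because the lift is squared in the denominator, detecting a change five times smaller needs roughly 25 times more visitors, which is why only very high-traffic sites can reliably test tiny UI tweaks.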

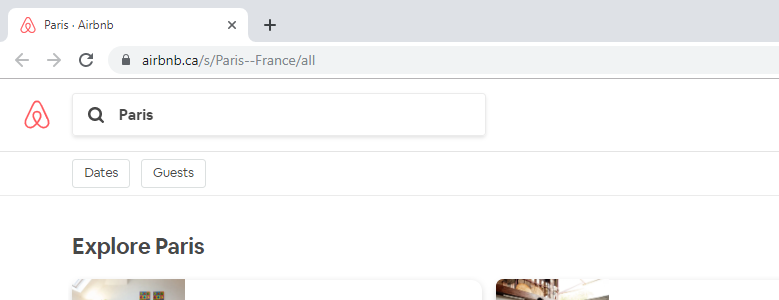

As an example, we'll look at Airbnb's city listing page: www.airbnb.ca/s/Paris--France/all. As a first step, we simply open that link in Chrome. Nothing interesting yet.

USEFUL CHROME EXTENSION FOR RESIZING: Window Resizer. Because we'll be making more of these comparisons and taking screenshots in the future, it's a good idea to use a standardized screen resolution, for example a width of 1440 or 1600px (full-width screenshots can be too big).

Step 2: Checking For Active Experiments

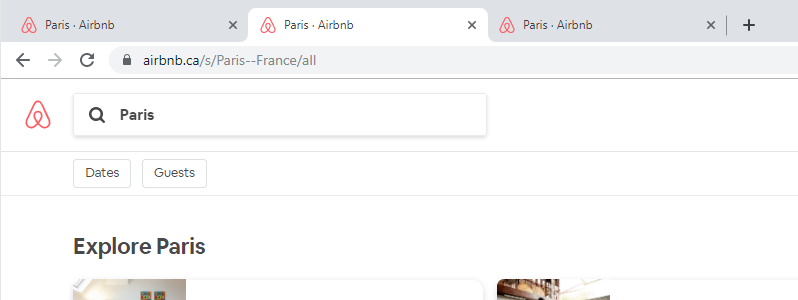

Now the fun part begins. Here we want to check whether any experiments are running, so we open a few more tabs to make comparisons and see if anything changes. Because these companies often use cookies or local storage to assign us to an experiment variation, we simply clear cookies (and other site data), refresh the page, and check whether anything has changed. We repeat this a handful of times to get a sense of the experiment's structure, if there is one.
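Comparing screenshots by eye works fine, but if you also save a snippet of the region you're watching (for example, the search bar's HTML copied from DevTools) after each cleared-cookies reload, a small Python sketch like this one can group those snippets into distinct variations and count how often each appears. The snapshots are made-up placeholders, and hashing only the watched region is our own assumption: full pages usually contain per-request tokens that would make every load look unique.

```python
import hashlib
import re
from collections import Counter


def fingerprint(snapshot: str) -> str:
    """Hash a snippet after collapsing whitespace, so trivial formatting
    differences are not counted as separate variations."""
    normalized = re.sub(r"\s+", " ", snapshot).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


def count_variations(fresh_session_snapshots: list[str]) -> Counter:
    """Count each distinct snippet across fresh sessions
    (one snippet per cleared-cookies page load)."""
    return Counter(fingerprint(s) for s in fresh_session_snapshots)


# Hypothetical snippets of a search bar from four cleared-cookies reloads.
snapshots = [
    "<form class='search'>Where are you going?</form>",
    "<form class='search'>Where  are you going?</form>",  # extra space only
    "<form class='search-v2'>Location | Check in | Guests</form>",
    "<form class='search'>Where are you going?</form>",
]
variations = count_variations(snapshots)
print(f"{len(variations)} distinct variation(s): {dict(variations)}")
```

Two fingerprints with a 3:1 split would suggest an experiment worth a closer look; a handful of reloads only gives a rough idea of the split, not a precise allocation.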

If nothing changes, perhaps no experiments are actively running on that given page or segment, and we might come back in a week or so.

If the UI differs between variations (with the assignment often stored in a cookie), it gets our attention. To make sure the change isn't just the result of some randomization algorithm, such as content that rotates on every load, we refresh the page once or twice without clearing cookies: a real experiment assignment should stay the same across these refreshes.
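The stickiness check can be expressed as a tiny function. This is a hypothetical sketch: it assumes you have labeled what each session showed on every refresh (here simply "A" and "B"), and it treats a session with no recorded refreshes as inconclusive rather than sticky.

```python
def is_sticky(refreshes_by_session: dict[str, list[str]]) -> bool:
    """Return True if every session kept showing a single variation.

    Keys are arbitrary session labels; values are the variation labels seen
    on each refresh made WITHOUT clearing cookies. An empty list counts as
    not sticky, since it provides no evidence either way.
    """
    return all(len(set(seen)) == 1 for seen in refreshes_by_session.values())


# Hypothetical observations.
sticky = {"session-1": ["A", "A", "A"], "session-2": ["B", "B"]}
rotating = {"session-1": ["A", "B", "A"]}
print(is_sticky(sticky), is_sticky(rotating))
```

Different sessions may land in different variations (that is the point of an experiment); what matters is that each session stays put, which points to a persisted assignment rather than per-load rotation.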

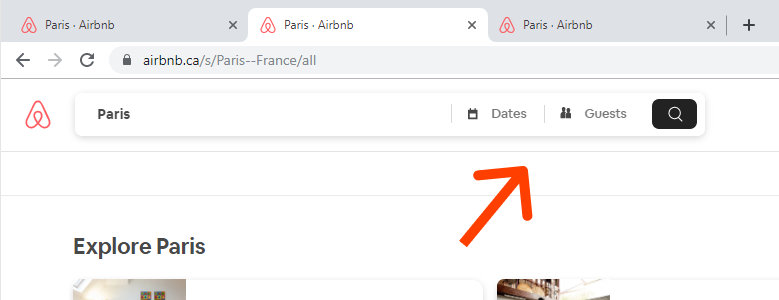

Step 3: Bingo - We Have An Experiment - Taking Screenshots

Occasionally we'll discover something interesting, as in this case, where Airbnb is actively testing different search bars. :) Now we want to capture every variation in a folder for future reference, so we take screenshots of each one (for example, I use Snagit).

Step 4: Detecting The Decision

Since this is an active experiment, it's just a matter of time before the a/b test completes and a decision is made to implement or reject the change. To detect the decision, we check back on the same page a week or a month later. Of course, we again clear cookies a number of times to make sure the experiment has finished, that is, every fresh session now shows the same version. And there you have it: this is how we learn from what leading companies are experimenting with. I hope you can do the same and then share some of your leaked a/b tests with us to publish. :)
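The follow-up check can be sketched as a comparison between what fresh sessions showed during the test and what they show now. This is a hypothetical helper, not a published method: it assumes you noted which version was the control (for example, the one the page showed before the test appeared), and the labels "A", "B", and "C" are placeholders. Keep in mind that a few identical reloads can't rule out a lopsided split such as 95/5, so more reloads give more confidence.

```python
def detect_decision(control: str, before: list[str], after: list[str]) -> str:
    """Classify the outcome of a previously observed experiment.

    control: label of the version considered the control.
    before:  labels seen in fresh sessions while the test was active.
    after:   labels seen in fresh sessions on the follow-up visit.
    """
    seen_after = set(after)
    if not seen_after:
        return "no data"
    if len(seen_after) > 1:
        return "still running"    # sessions are still being split
    survivor = seen_after.pop()
    if survivor == control:
        return "rejected"         # the control was kept
    if survivor in set(before):
        return "implemented"      # a tested variation became the default
    return "changed again"        # neither tested version survived


# Hypothetical observations.
before = ["A", "B", "A", "B"]
print(detect_decision("A", before, ["B", "B", "B"]))
```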

How Useful Are The Leaks?

Given that we don't have full visibility into these experiments and are only seeing the tip of the iceberg, some people might wonder how useful this information is. My answer is that although these leaked a/b tests are only subtle evidence for or against particular changes, they are evidence nevertheless. And evidence should be remembered and combined, which we do by adding up all the evidence under patterns. In a nutshell, we're interested in the patterns that reproduce across companies, since each reproduction increases the probability that they are good UI changes for others to design or experiment with in the future.
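One simple way to picture "adding up evidence under patterns" is a tally of which distinct companies implemented or rejected each pattern. This is our own simplified sketch, not the scoring actually used for the published patterns: the rule "implemented by at least N companies and rejected by none" is an assumption, and the companies and pattern names are hypothetical placeholders.

```python
from collections import defaultdict


def reproducing_patterns(leaks: list[tuple[str, str, str]],
                         min_companies: int = 2) -> dict[str, list[str]]:
    """Return patterns implemented by at least `min_companies` distinct
    companies and rejected by none, mapped to those companies (sorted).

    Each leak is a (pattern, company, outcome) tuple, where outcome is
    "implemented" or "rejected"; other outcomes are ignored.
    """
    implemented: dict[str, set[str]] = defaultdict(set)
    rejected: dict[str, set[str]] = defaultdict(set)
    for pattern, company, outcome in leaks:
        if outcome == "implemented":
            implemented[pattern].add(company)
        elif outcome == "rejected":
            rejected[pattern].add(company)
    return {
        pattern: sorted(companies)
        for pattern, companies in implemented.items()
        if len(companies) >= min_companies and not rejected.get(pattern)
    }


# Hypothetical leaks; these are not real results.
leaks = [
    ("prominent search bar", "Company A", "implemented"),
    ("prominent search bar", "Company B", "implemented"),
    ("hidden prices", "Company A", "rejected"),
    ("hidden prices", "Company C", "implemented"),
    ("sticky header", "Company C", "implemented"),
]
print(reproducing_patterns(leaks))
```

Counting distinct companies rather than raw leaks keeps one company's repeated tests from looking like independent reproductions.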

Want To Help Detect Experiments And Speed Up Our Learning?

I'll repeat one more time that we are open to publishing the leaked a/b tests you detect, and to building a community of people who'd like to learn faster. :)

Jakub Linowski on Oct 10, 2019