Should You Always Be Running A/B Tests?

There are some big companies out there that seem to be increasing their velocity and volume of experiments. And then there are the smaller ones that might run a handful of sporadic a/b tests. So I ask: should we always be finding experiments to run no matter what, or are there good reasons to slow down or pause experimentation altogether?

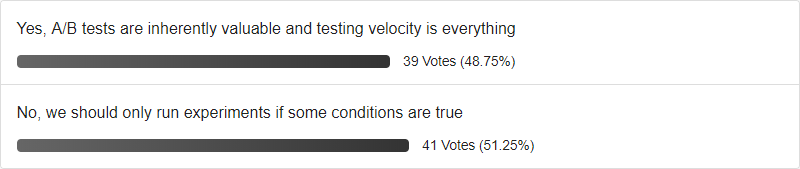

Your Opinion [Poll Closed]

When would you run experiments, and when would you not? To make things more interesting, please share your thoughts as to why you voted in a particular way as a comment.

Three Checks On When To Run Experiments [Updated Oct 19]

Thank you all for casting your votes. The poll results look rather even, which might indicate that both answers have some truth to them. With that, here is my opinion on when we should be running a/b tests.

First Check: Do You Need More Confidence To Make A Decision?

The first way to tell if you need to experiment is to ask yourself if you need any additional confidence at all to make a decision. Chances are that you might have already decided on making a UI change in the first place. Perhaps you're shipping a bug fix, or have made a business decision, or are simply launching something new that does not yet exist. Or perhaps you already have higher confidence from relying on past experiments and prior probabilities to make decisions (it's a powerful advantage).

And then for all other ideas, you most likely have little or no existing confidence, and you hunger for more. This is the first trigger in favor of designing and executing an a/b test. When you run an experiment, your confidence begins to shift away from a neutral state (for better or for worse).

Second Check: Will You Detect An Effect With Enough Confidence To Make A Decision?

As you start running a/b tests you'll soon notice that answers are not as black and white as you might think - experiments generate confidence ranges and probabilities that fluctuate. For this, you set your own confidence thresholds and criteria that are good enough for you to make a decision. Bigger companies with bigger traffic tend to set higher standards for what they consider good enough. And smaller companies may need to lower their confidence thresholds to make decisions quicker. Whatever your criteria, it's good to make them explicit before starting your experiment.
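To make this check a little more concrete, here is a rough sketch of how one might estimate the traffic needed to detect a given effect. It uses the standard normal-approximation formula for comparing two conversion rates. The function name, the 5% significance level and the 80% power are illustrative assumptions, not thresholds recommended in this post.

```python
from math import ceil
from statistics import NormalDist


def required_sample_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant (two-sided, two-proportion z-test).

    baseline_rate: conversion rate of the control, e.g. 0.05 for 5%
    relative_lift: smallest relative effect worth detecting, e.g. 0.10 for +10%
    alpha, power:  assumed thresholds; pick your own before the test starts
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical example: a 5% baseline conversion rate and three candidate lifts.
for lift in (0.05, 0.10, 0.30):
    n = required_sample_per_variant(0.05, lift)
    print(f"relative lift {lift:.0%}: about {n} visitors per variant")
```

If the resulting number is far beyond the traffic a page can collect in a reasonable time, that is a sign the second check might fail unless the expected effect is larger or the thresholds are relaxed.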

Third Check: You Run Experiments If The Pay-Off Is Greater Than The Cost

Of course experimentation also has an associated cost. Hence there needs to be a check on how much it costs to run experiments and your return on investment from possible experiments. This could be calculated for a single experiment or for a series of tests in a more long term optimization program. If the possible gain is higher than the possible loss, then you have your third green light to move forward with testing.
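One simple way to frame this check is as an expected value calculation. The sketch below is only an illustration: the function names, the idea of a single win probability, and all of the numbers are assumptions made for the example, and a real program would likely need a richer model.

```python
def expected_net_value(win_probability, gain_if_win, loss_if_lose, cost_to_run):
    """Expected pay-off of an experiment minus what it costs to run.

    win_probability: assumed chance that the variation beats the control
    gain_if_win:     assumed value of shipping a winning variation
    loss_if_lose:    assumed value lost while a losing variation is exposed
    cost_to_run:     research, design, development and analysis costs
    """
    expected_gain = win_probability * gain_if_win
    expected_loss = (1 - win_probability) * loss_if_lose
    return expected_gain - expected_loss - cost_to_run


def passes_third_check(win_probability, gain_if_win, loss_if_lose, cost_to_run):
    """Green light only when the expected pay-off exceeds the expected cost."""
    return expected_net_value(win_probability, gain_if_win, loss_if_lose, cost_to_run) > 0


# Hypothetical numbers in any currency: 30% chance of a 50,000 gain,
# a 5,000 loss during the test if the variation loses, and 8,000 to run it.
print(expected_net_value(0.30, 50_000, 5_000, 8_000))
print(passes_third_check(0.30, 50_000, 5_000, 8_000))
```

The same function could be summed over a list of planned tests to evaluate a longer term optimization program rather than a single experiment.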

So Should You Always Be Running A/B Tests?

Although I strongly believe in experimentation, my opinion is that all three conditions have to be met for a company to start doing so.

I assume that a lot of the poll respondents answered positively to the "inherent value of a/b testing" because they are able to detect smaller effects confidently and with a large pay-off. For those respondents, both of these conditions most likely will hold true for a long time. Even if particular screens and flows become optimized (as they do over time), these individuals can shift to other areas and continue experimenting there.

Smaller companies can also engage in optimization and experimentation. However, they need to check more regularly if it makes sense for them to do so - and that's probably more reflective of the people who answered with a "no". One advantage that smaller companies hold over larger ones is that their screens tend to be less optimized, with a higher upside. And larger effects in turn are easier to detect confidently. One powerful strategy for smaller companies is to combine multiple high probability changes based on past experiments into a single test.

Jakub Linowski on Oct 04, 2018


Comments

David 6 years ago ↑0↓0

I'd like to vote for both answers! We're always trying to test as much as we can but at the same time, we only test where we think it makes sense (some conditions are true).

We typically don't run more than one test per funnel and we only test on pages where we have the traffic and conversion to support it.


Andres Lucero 6 years ago ↑2↓0

In my experience, many companies simply don’t have the traffic to run multiple concurrent—or even consecutive—experiments in a month. Given the resources needed to research & identify areas for improvement, create a strategy & hypothesis for an experiment, and then design & develop the experiment itself, perpetual testing may not even be financially viable.

Every company is different, so each one has to be evaluated on their own terms and the realities of their business need to be taken into consideration.
