Is It Correct To Make Multiple Design Changes In A Single Test Variation?

As you look at and analyze a given UI screen or flow, ideas for how you might improve it rush to mind. You then face two general approaches: either a/b test each change individually, or group some of them together into a single variation. Which is right?

Your Opinion [Poll Closed]

My Thoughts & Experience [Updated Sep 27, 2018]

First of all, thank you for voting everyone! I'm surprised we received that many votes. Thanks again!

As for the results, it clearly looks like more people are in favor of isolating a single change within an experiment. I think this is often motivated by a desire to understand whether a given change has an impact (as seen in the comments below). Grouping multiple changes together in a single variation, by contrast, is done in the hope of a higher overall impact, on the assumption that most of the changes are positive and stack up. Of course, multiple changes also run the risk of cancelling each other out, with some being negative and some positive.
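To make the "stack up" versus "cancel out" idea concrete, here is a minimal sketch. It assumes each change scales the conversion rate independently (a simplification), and all lift values are made up for illustration rather than taken from any of our tests.

```python
from math import prod

def combined_lift(relative_lifts):
    """Net relative lift of a variation, assuming every change
    multiplies the conversion rate independently (an assumption)."""
    return prod(1 + lift for lift in relative_lifts) - 1

# Hypothetical case 1: three small positive changes that stack up.
stacking = combined_lift([0.05, 0.04, 0.03])

# Hypothetical case 2: one positive change mostly cancelled by two negative ones.
cancelling = combined_lift([0.10, -0.05, -0.04])

print(f"all positive: {stacking:.1%}")
print(f"mixed:        {cancelling:.1%}")
```

In the second case the variation would look nearly flat, even though each individual change had a real effect in one direction or the other.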

How Do Bigger Vs Smaller Tests Compare In Terms Of Impact?

To answer the above question, we should look at evidence of how larger tests (with multiple changes) compare to smaller tests (with isolated changes). Luckily we have some data on this.

For a number of years we've been running larger tests for our clients and writing about it under the GoodUI Datastories project. Some of these stories include retests from failed attempts so the median impact is slightly inflated. Nevertheless when we look across 26 such projects, we have a median impact of 23%.

Now in comparison, we have also been collecting more isolated test results as patterns. So far we have published 159 smaller a/b tests with more isolated changes, with a median impact of 6.6%.

This comparison is our first signal validating the potential of grouping changes into single variations.
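One practical consequence of the gap between the two medians is test duration. The sketch below uses the standard two-proportion sample size approximation to estimate how many visitors each variation would need to detect a lift of each size. The 5% baseline conversion rate, the 0.05 significance level and the 80% power are assumptions chosen for illustration, not figures from our projects, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect a relative lift
    with a two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Assumed 5% baseline; the lifts are the two medians quoted above.
small = visitors_per_variation(0.05, 0.066)
large = visitors_per_variation(0.05, 0.23)

print(f"6.6% lift: {small:,} visitors per variation")
print(f"23% lift:  {large:,} visitors per variation")
```

Under these assumptions the smaller lift needs on the order of ten times more traffic to detect, which is the same concern several commenters raise about low-traffic sites.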

Should We Make As Many Changes As We Can Think Of?

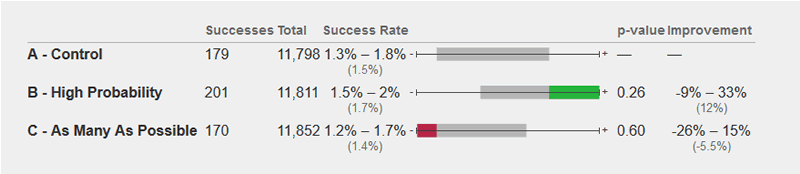

Quite recently we were also privileged to run an interesting experiment on a landing page for a premium service of an online driving school. I just want to focus on the high-level test setup, which was designed in the following way:

- A) CONTROL

- B) ONLY HIGH PROBABILITY CHANGES (BASED ON PAST TESTS)

- C) AS MANY CHANGES AS THE TEAM COULD THINK OF

As you can imagine, the results were the following:

This suggestive test (it was stopped early for external business reasons) is a subtle demonstration that "more changes" are not necessarily better, as seen in the C variation. Instead, the B variation combined only a handful of changes based on already tested patterns (all with net positive probability). Essentially, we were using positive past test results to decide which changes to group together. The approach taken in the B variant outperformed both C and A.

Summarizing This As Principles

Taking all of the above into consideration, we have come up with the following two guiding principles to be used on our projects:

THEN: GROUP HIGH PROBABILITY IDEAS INTO 1 VARIATION

THEN: ISOLATE THE CHANGE INTO 1 VARIATION

To make things more interesting, please also share your thoughts as to why you voted in a particular way as a comment. Perhaps there are cases when both answers are true? Let's talk about it.

Jakub Linowski on Sep 24, 2018

Comments

Joerg 6 years ago ↑2↓1

In my experience multiple changes in one test are fine as long as all of them aim at the same hypothesis. Especially with lower traffic sites, our test strategy is more to confirm the right hypothesis than to measure the incremental impact of each individual change (which would take ages to reach significance given the low contrast between variations with only subtle design changes).

Adrian 6 years ago ↑1↓0

It's perfectly OK to group multiple changes into a single variation. It gives you a greater chance to get bigger lifts. Generally, small changes = small gains. Also, unless you have a lot of traffic, it can take too long to test multiple variations/iterations to get the significance required to gauge a real win. You need to go for 10%+ lifts in conversion because with anything lower, particularly in the 3 - 5% range, the winners often 'disappear' for a number of reasons, natural variance/noise being just one.

Richard 6 years ago ↑0↓0

In my opinion both approaches are right and it all comes down to the purpose of the given test. I see two basic options: a) to enhance the overall user flow / UX of a given web page, or b) to optimize the performance of a page by enhancing certain website sections. In other words, if your goal is to fine-tune the performance of specific website elements, it should be more effective to test each of these changes separately. But if you are trying to enhance a more complex, behavior-based experience, I believe it would be necessary to make several connected changes that could cause that snowball effect of "attention to conversion" to happen. Complex problems usually don't have simple solutions.

Based on that, I would try to answer the poll question by looking at the bigger picture and context of testing. If a company is trying to fine-tune some page elements (by testing every change separately), it means that it stays on its set course, and the outcome could usually be somewhat significant (several % to a few tens of % maybe?). The question is, is it worth it? To invest in all of these changes, test them, analyze them, etc. just to gain several % of performance? How big does the company have to be for those few % to matter in monthly purchases (or signups etc.)?

On the other hand, if a company is trying to come up with a new/updated approach to its page visitors, it might suggest that it is trying to stay up-to-date with current standards and/or come up with new ideas/better concepts to present itself or its product. If done right, this might bring the company's presentation to a new level, create buzz for the business and also increase conversions. I believe this approach is more effective in the long run.

Test separately only essential parts or problems in your web page to make them work "at least" on OK/well level, but overall focus on opportunities to enhance UX of your page/website. With that in mind, I would try several design changes in a single test variation to increase performance of that attention-to-conversion snowball effect.

Neil 6 years ago ↑0↓0

Making multiple changes in one variant is not best practice as you really need to be able to determine the impact of each change - not all of them may show a positive uplift.

However, running an experiment between two wildly different experiences can help save time by confirming a new baseline, should the new experience win, to run future iterative changes on.

Vito 6 years ago ↑0↓0

Even a single change may consist of multiple micro changes. Still, to better understand the cause of a certain result, it is better to limit the variations.

Liz Pok Lynch 6 years ago ↑2↓0

I agree that in a perfect testing world, you'd want to isolate each variable. But in the imperfect real world, where you might need a very large impact very quickly, I think it becomes "ok" to change multiple things in a single test. It's critical that everyone understand that that's the case, however, so no one points to a single change and says that's what drove the response. If you really need to understand the impact of a single variable, then you'd better isolate that variable.

Bryce Lee 6 years ago ↑1↓0

For an optimization test, I would say no. But you can't optimize your way to a new design, so if you are redesigning the site, you can do a benchmarking test before and after, where everything has changed.

elisa 6 years ago ↑1↓0

Actually, it depends on the reason why those changes may occur. Is it to improve the UX based on data results (high abandon rate on a specific page)? Is it just based on assumptions ("I am sure this will look or work better if we make those changes")?

I believe that any requested change should be data driven (when data is available, of course) rather than assumption based. Once an issue has been identified through GA or tools such as Hotjar, for instance, the idea would be to implement the change(s) related to that specific issue. Then, analyzing all the changes based on data, ideating, prioritizing and testing them is the best way to make sure that they will serve the users as much as they serve the business goals.

Making one or several changes at once really depends on the reason behind it. Sometimes one improvement can lead to several changes within the design. Therefore, both (yes and no) are valid.

Steve Place 6 years ago ↑1↓0

I would say generally no; however, it depends on what you're testing. Subtle design changes in a single variation could be okay, but several copy or flow changes would not be okay.

Rob Spangler 6 years ago ↑6↓0

On an outdated page with multiple problems, it's simply too expensive to test individual updates. In that case, I'd suggest determining a winner and then going back through to test individual elements by priority.

On the other hand, you may have a page that's working generally well and needs several enhancements. If these are tested all at once in a single variation, you'll never know what update caused the difference. Perhaps your conversion went up 10% due to the CTA, but conversion went down 5% due to the heading. You'll only see +5%, but remain blind to the cause.

What do you think?

Jakub Linowski 6 years ago ↑1↓0

That's how I see it too. :) Will share my thoughts in a write up in a few days.

Rob Spangler 6 years ago ↑1↓0

Great write up! Thanks for following up with your thoughts and data.

Annand 6 years ago ↑2↓0

I think it's just important to understand the trade off of testing atomically or testing multiple changes at once. At that point, you can decide what makes the most sense for your tests.

Jakub Linowski 6 years ago ↑0↓0

Care to share your opinion of the trade offs? :)

Annand 6 years ago ↑2↓0

Sorry! Testing atomically provides more clarity around what is causing your result but I've found you'll also launch more tests that have no impact when you only test small changes at a time.
