6 Tests By Ronny Kohavi

Test #226 on Microsoft.com by Ronny Kohavi (Feb 18, 2019, Desktop)

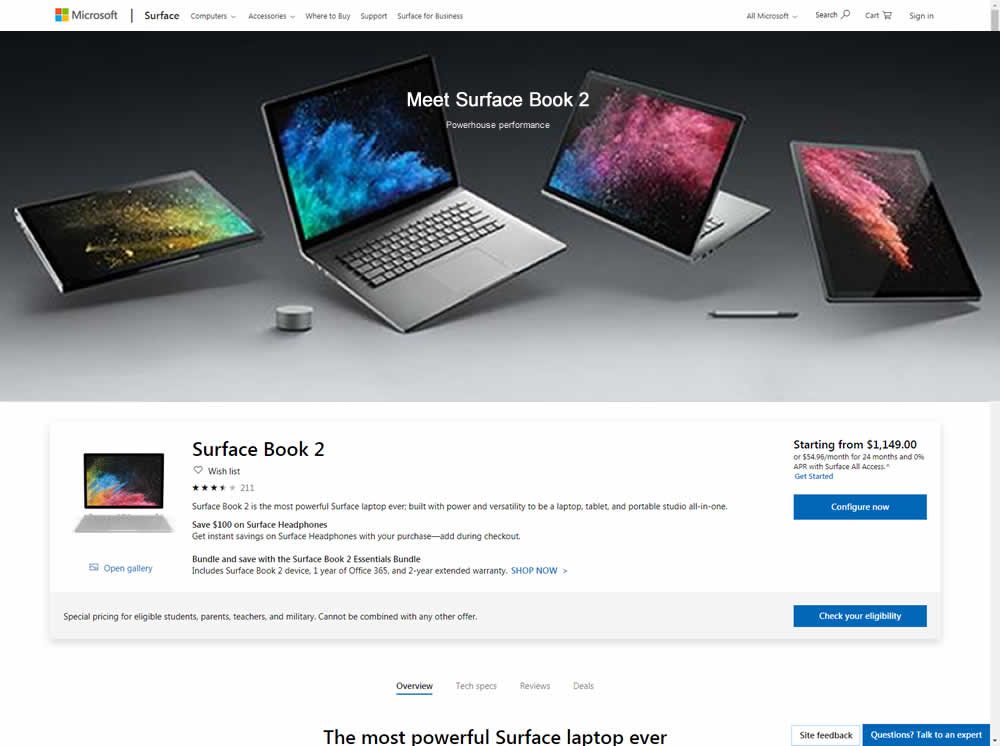

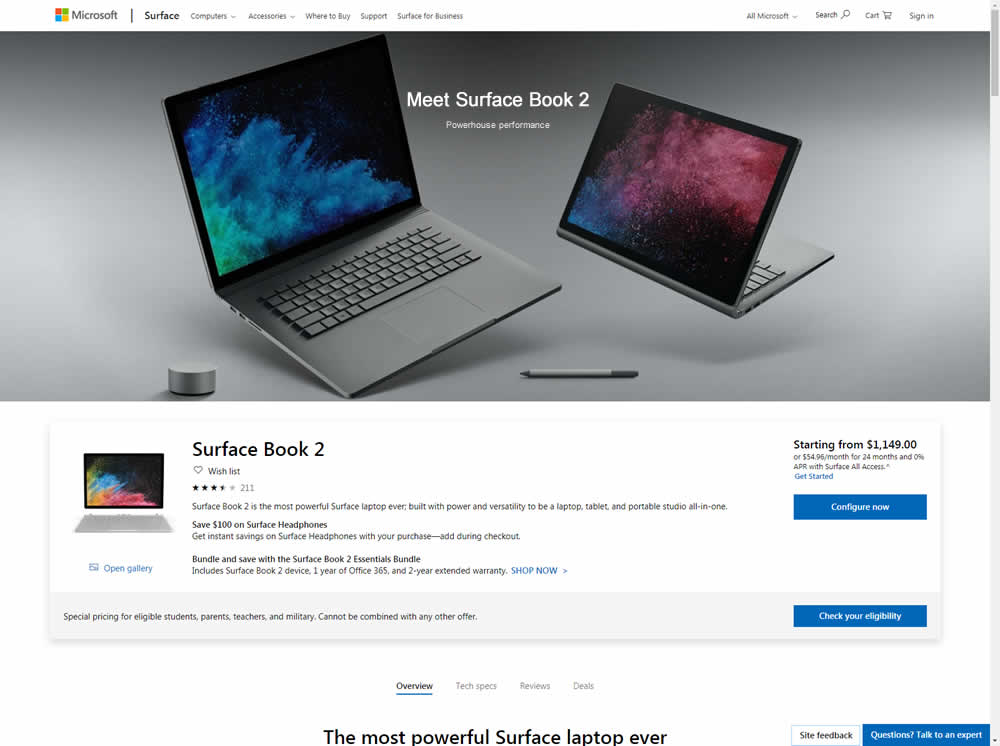

Ronny Tested Pattern #96: Single Focus Photos On Microsoft.com

Microsoft Store ran an experiment on the Surface Book 2 product page. The treatment showed a hero image with fewer but larger product photos.

Test #221 on Microsoft.com by Ronny Kohavi (Jan 27, 2019, Desktop)

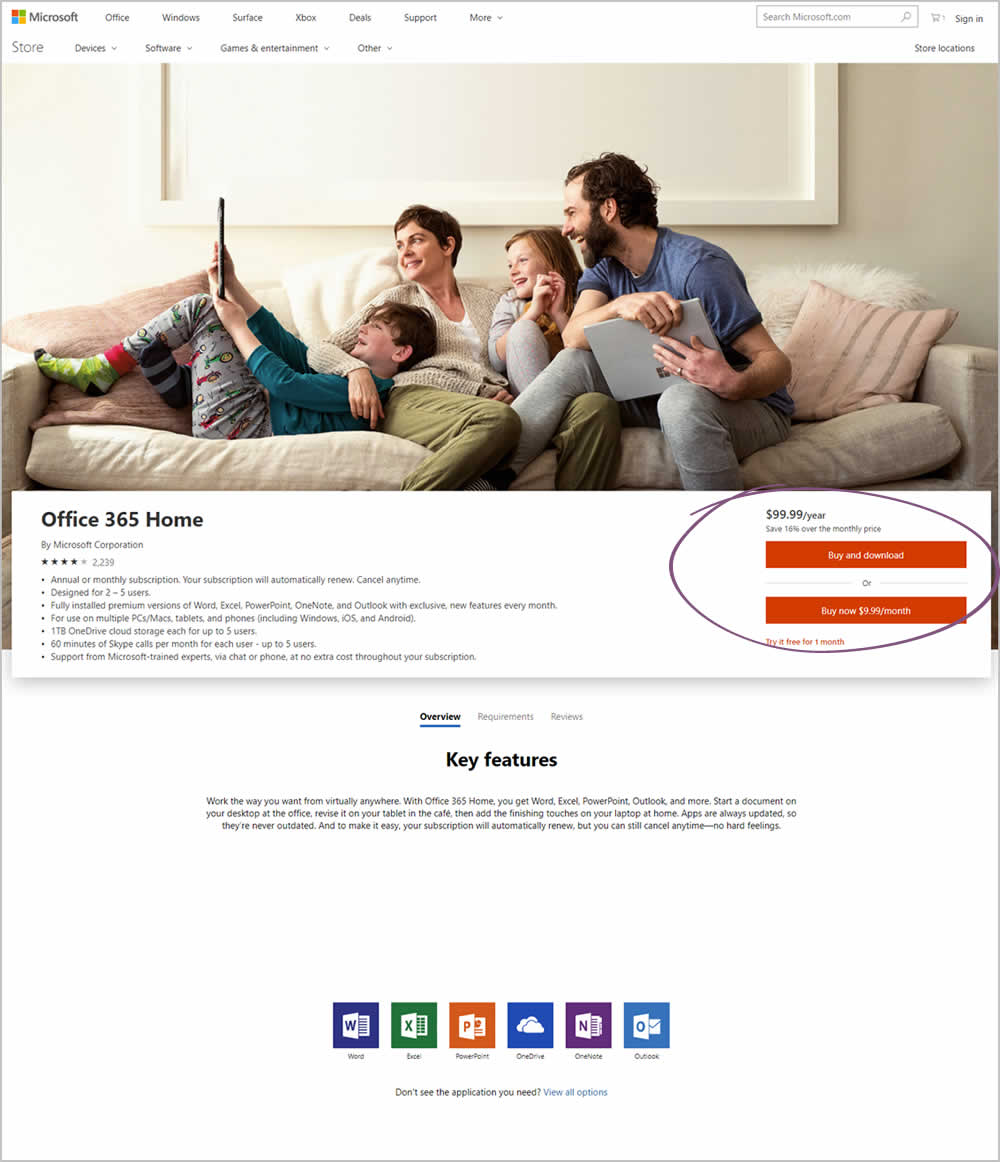

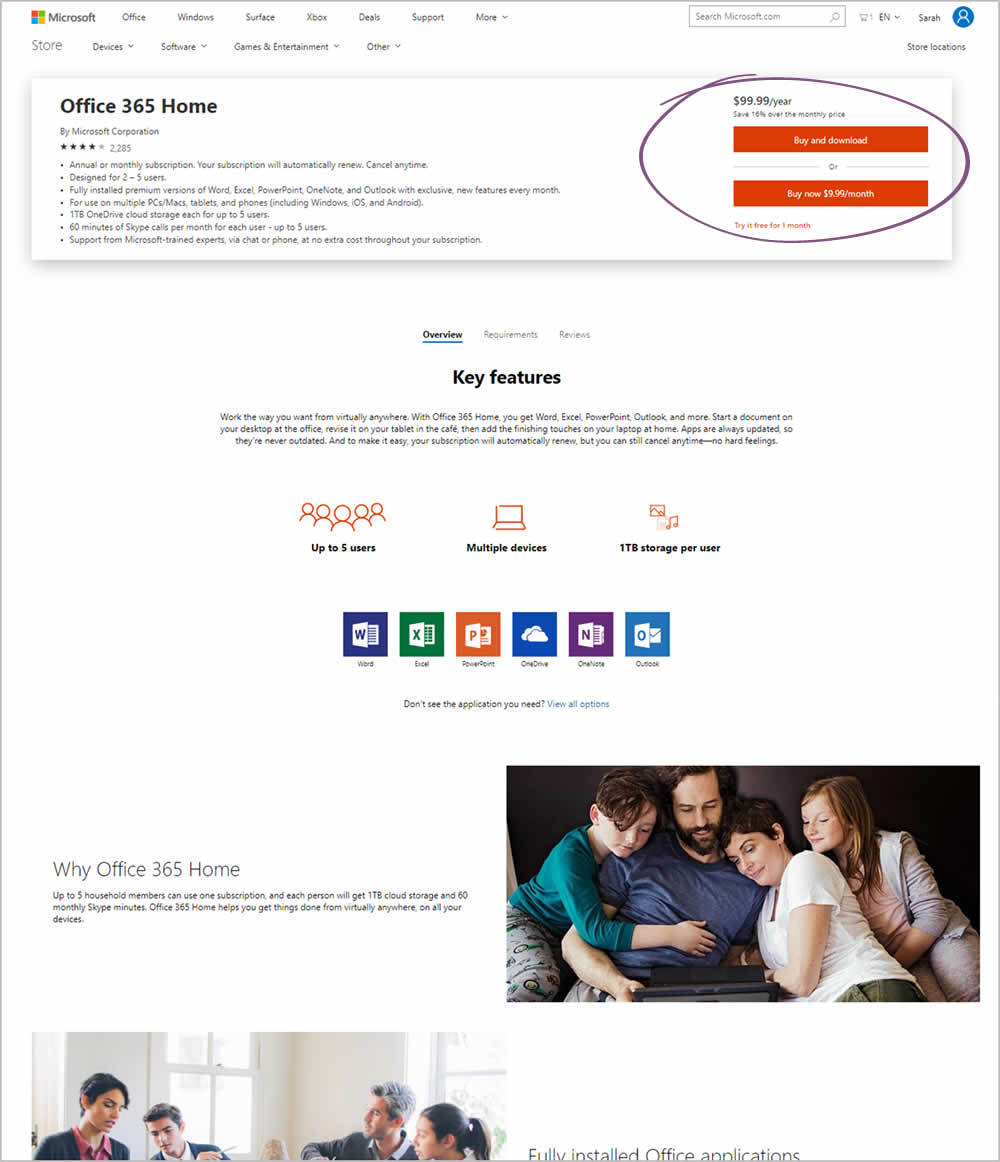

Ronny Tested Pattern #49: Above The Fold Call To Action On Microsoft.com

Microsoft Store ran an experiment on the Office 365 Home product page. The treatment raised the purchase calls to action higher by removing the hero image.

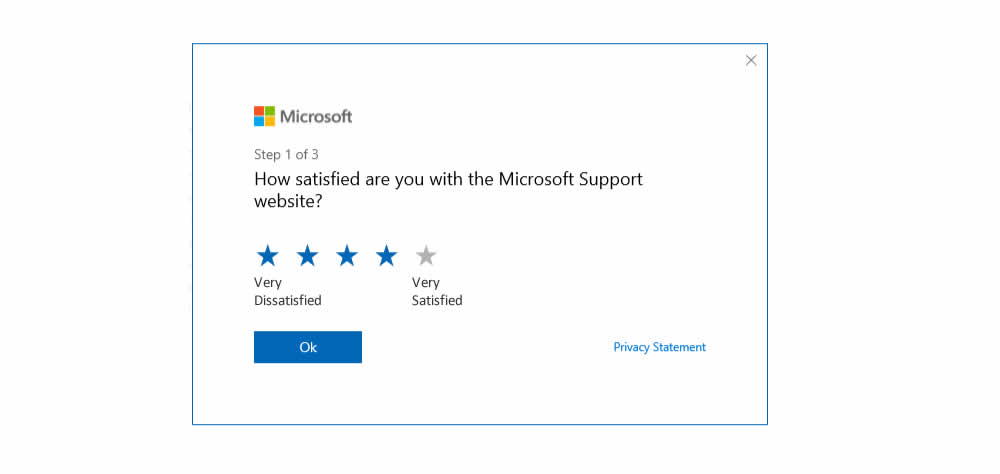

Test #216 on Support.microsoft.co... by Ronny Kohavi (Dec 21, 2018, Desktop)

Ronny Tested Pattern #2: Icon Labels On Support.microsoft.co...

Microsoft ran an experiment on its Customer Satisfaction Survey at both support.microsoft.com and answers.microsoft.com (Desktop). The treatment added two labels at opposite ends of the star rating scale (e.g., Very Dissatisfied and Very Satisfied), giving the scale additional meaning.

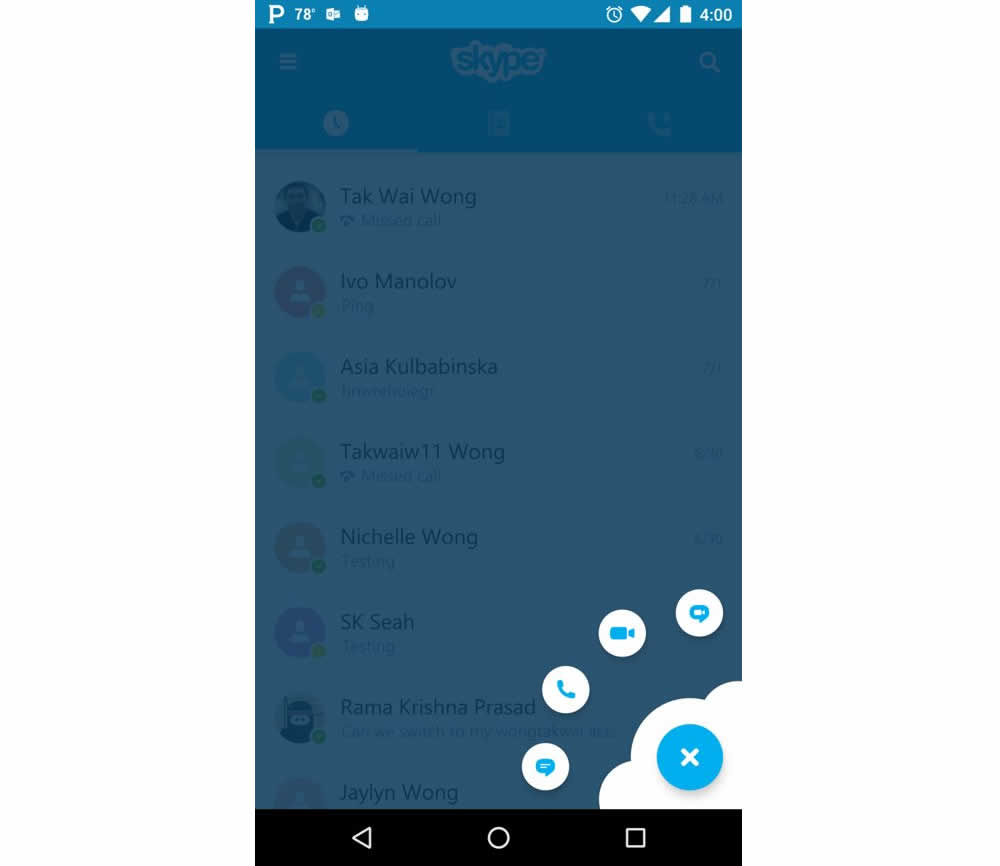

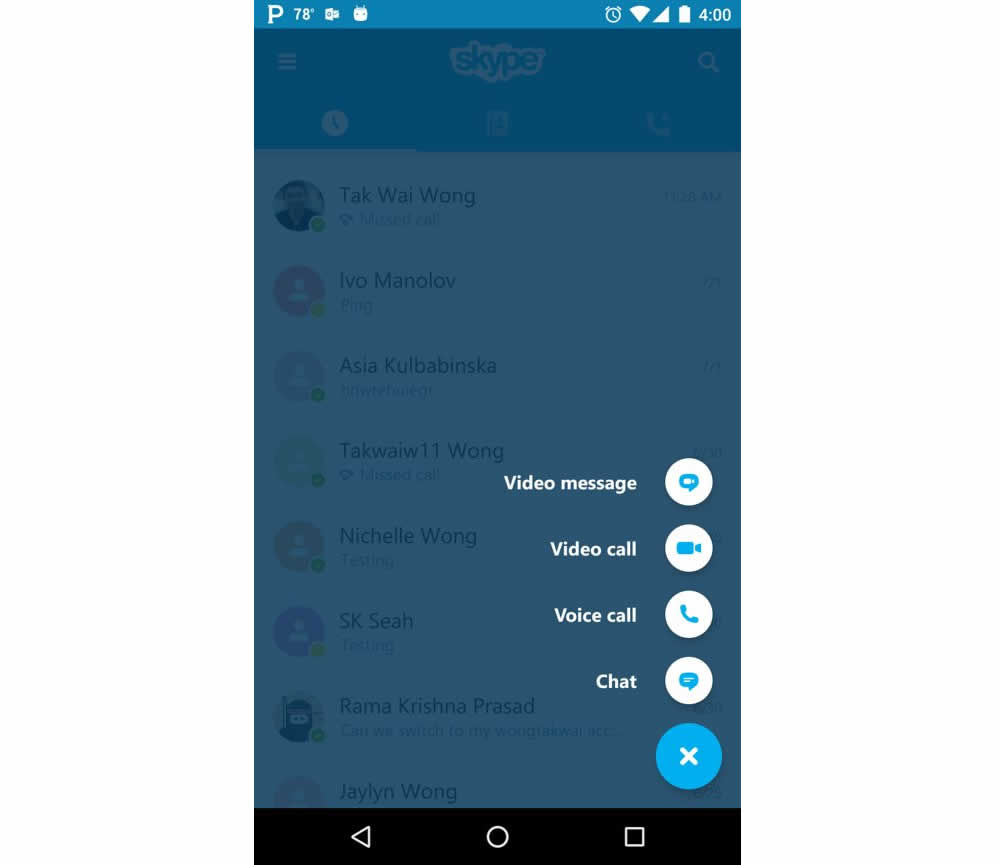

Test #211 on Skype App by Ronny Kohavi (Nov 20, 2018, Mobile)

Ronny Tested Pattern #2: Icon Labels

Microsoft Skype ran an experiment on the mobile segment of the Skype application. The treatment paired each icon with a corresponding label; the control showed icons only.

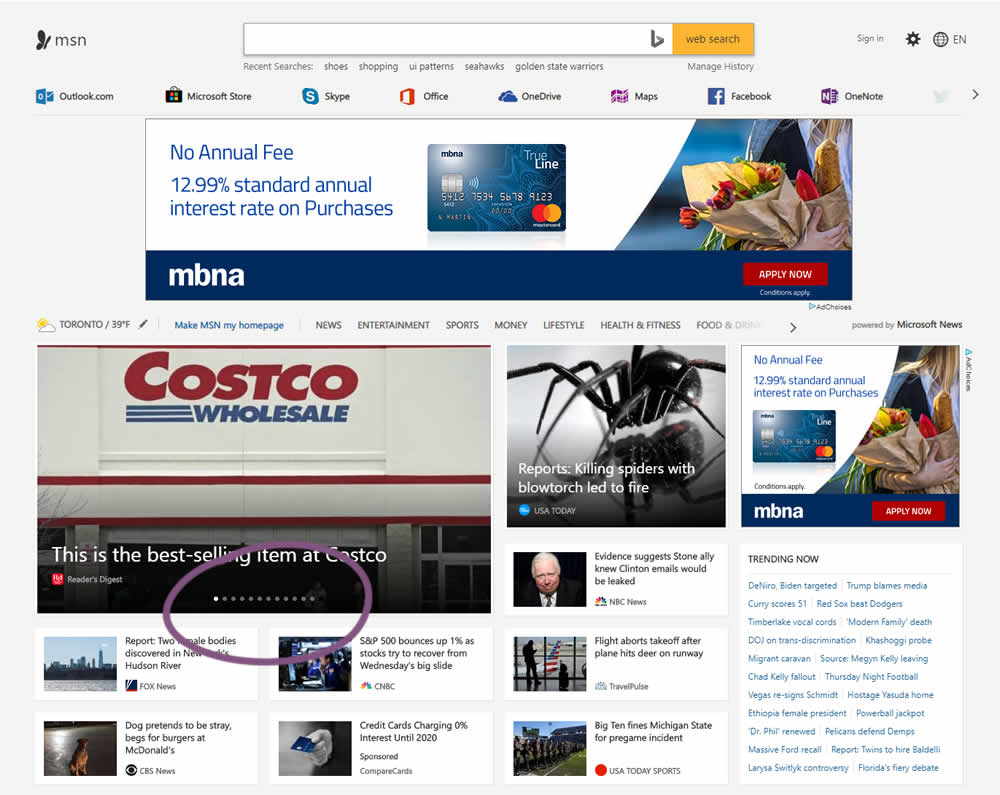

Test #205 on Msn.com by Ronny Kohavi (Oct 25, 2018, Desktop)

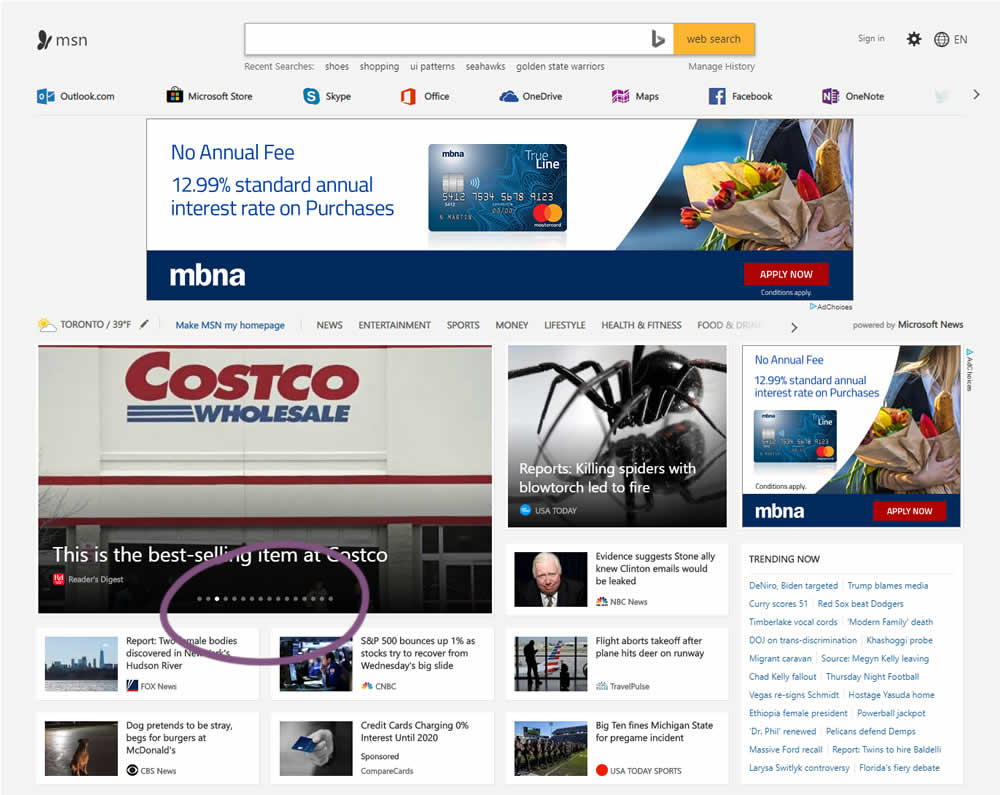

Ronny Tested Pattern #36: Fewer Or More Results On Msn.com

In this experiment, the number of carousel items was increased from 12 to 16.

Test #133 on Bing.com by Ronny Kohavi (Dec 13, 2017, Desktop and Mobile)

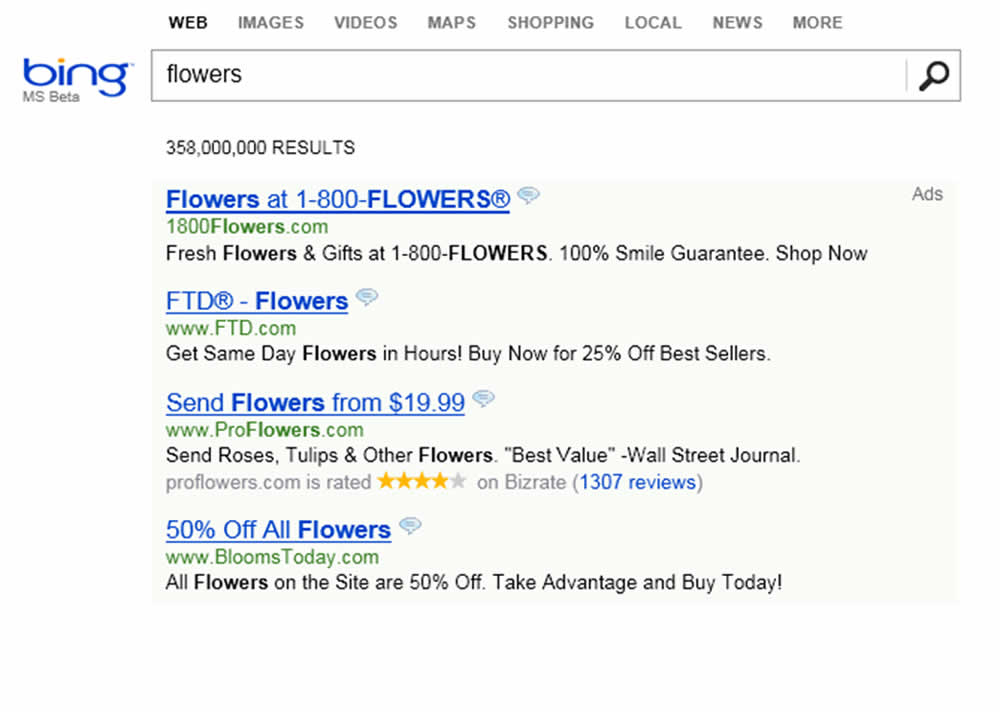

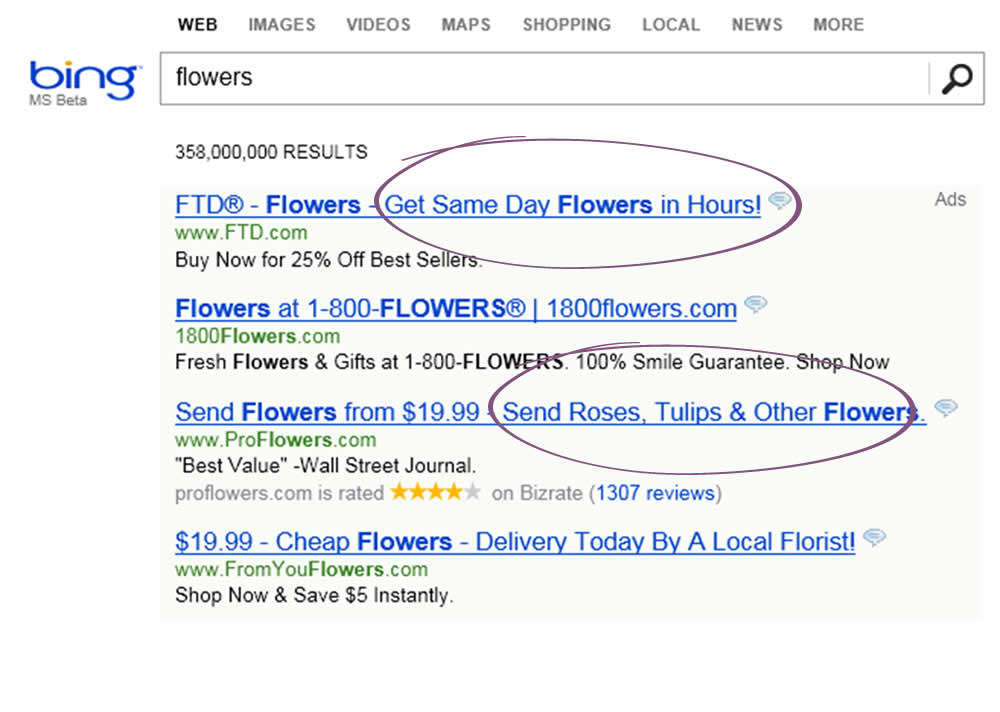

Ronny Tested Pattern #43: Long Titles On Bing.com

In 2012 a Microsoft employee working on Bing had an idea about changing the way the search engine displayed ad headlines. Developing it wouldn’t require much effort—just a few days of an engineer’s time—but it was one of hundreds of ideas proposed, and the program managers deemed it a low priority. So it languished for more than six months, until an engineer, who saw that the cost of writing the code for it would be small, launched a simple online controlled experiment—an A/B test—to assess its impact. Within hours the new headline variation was producing abnormally high revenue, triggering a “too good to be true” alert.

HBR, September–October 2017 Issue, https://hbr.org/2017/09/the-surprising-power-of-online-experiments

Note: This experiment was a solid success and replicated multiple times over a period of months. It worked at Bing and had a profound influence. The only reason we attributed 0.25 points (a "Maybe") is that we don't have the exact sample size and conversion data.