40 LinkedIn Poll Results On Experimentation

Over the last two years, I’ve run more than 40 LinkedIn polls on topics related to experiment design. The goal was to test, challenge, and refine common beliefs—and to engage more experienced practitioners in open discussion.

While no poll is perfect and many answers deserve nuance, I hope these results serve as useful starting points for deeper investigation. The respondents came from a mix of backgrounds, including in-house experimentation teams, platform builders, and agency practitioners.

Taken together, these polls offer a popular snapshot of how the experimentation community thinks about a range of topics, and a springboard for exploring them further, including in the live course I teach on experiment design.

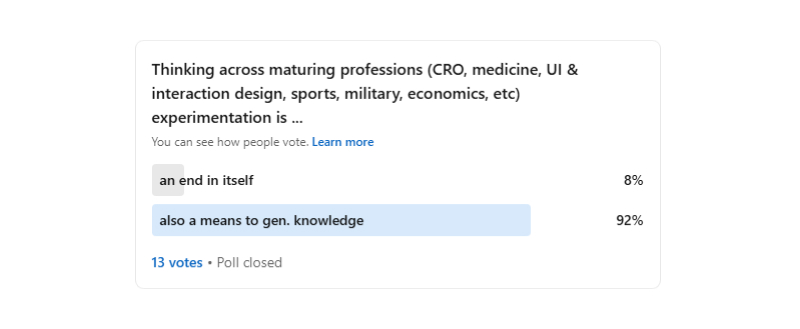

Poll 01: Is experimentation an end in itself, only helping us make that one better decision after our awesome test finishes? Or are we better off, as professionals, humans, and society, encoding, correcting, refining, and transforming quality experiments into general and predictive knowledge (including meta-analyzing replications and making cross-experiment comparisons)? Examples of knowledge: causal diagrams, theories, predictions, estimations, best practices, rules of thumb, heuristics, patterns (dynamic and probabilistic), and observations.

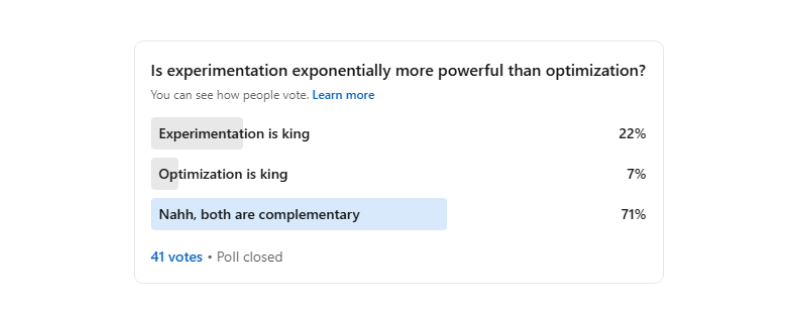

Poll 02: To polarize or not to polarize.

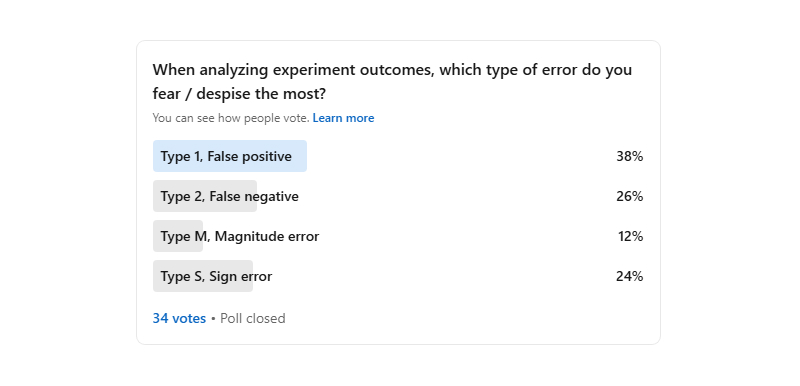

Poll 03: Which of these 4 types of errors do you despise the most?

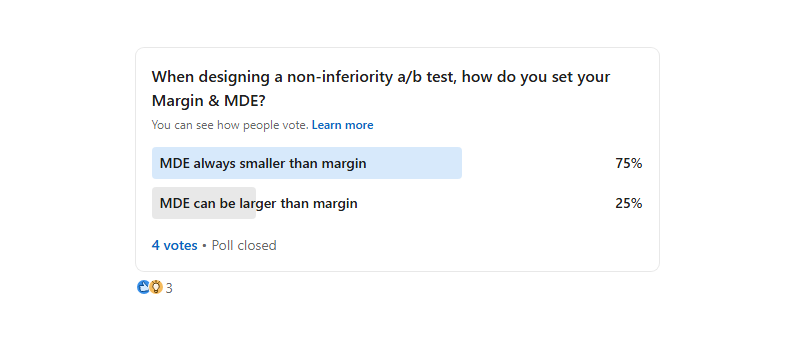

Poll 04: When designing a non-inferiority test can the MDE be larger than the margin?

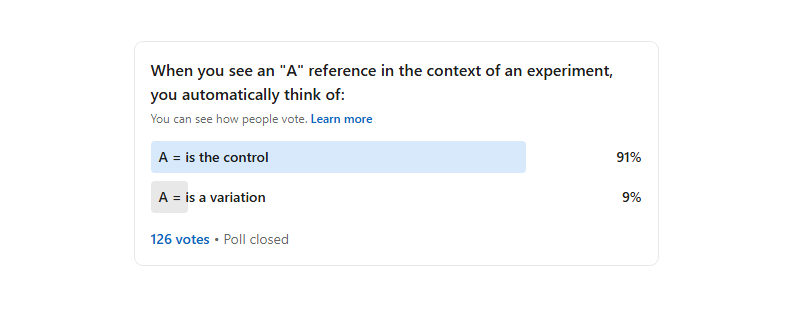

Poll 05: What's the first thing that comes to mind when you see an "A" reference in the context of experimentation? (Brainless Saturday experimentation poll) :)

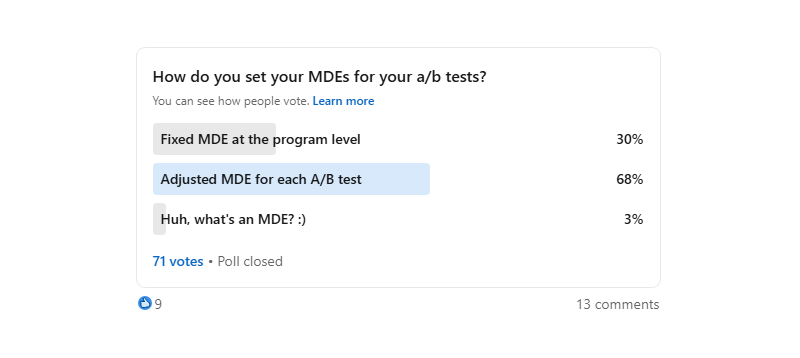

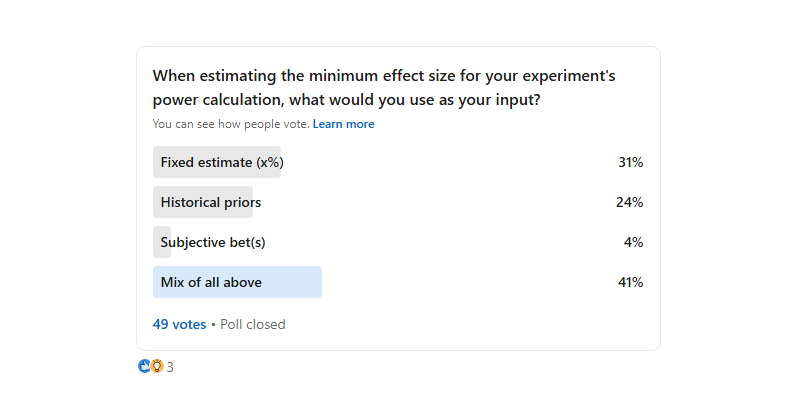

Poll 06: When designing experiments, how do you set your MDE (minimum detectable effect or minimum effect of interest) to power up your a/b tests? 1. Are they fixed and handed down to you at the program or team level (and you always work with the same MDE value between multiple tests)? 2. Or do you adjust them for each A/B test (perhaps some are bigger/smaller based on whatever factors, beliefs, historical data, expectations, or inputs you come across)?

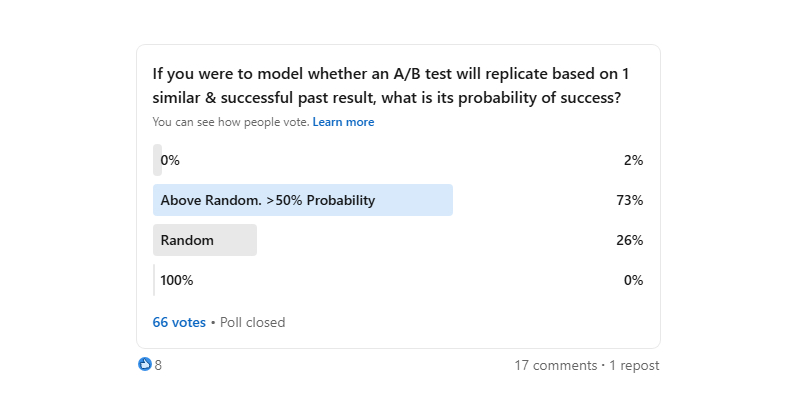

Poll 07: What will the probability of successfully replicating someone else's A/B test be?

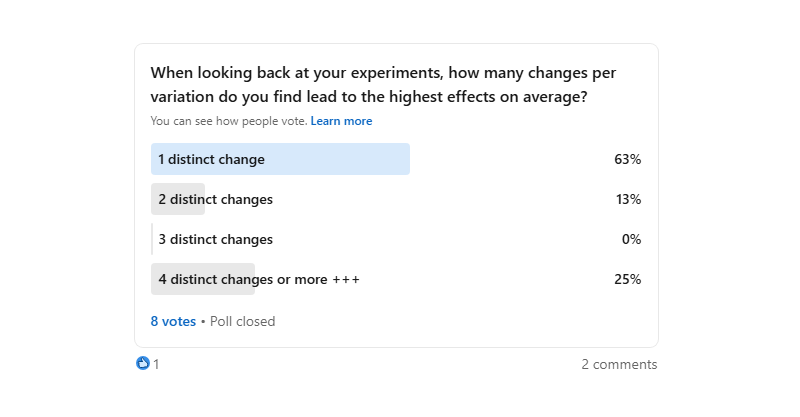

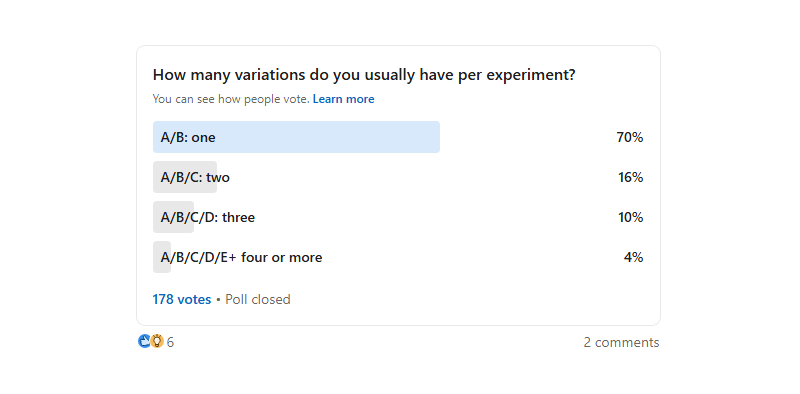

Poll 08: Looking at the complexity of your experiments, how many changes per variation lead to the highest POSITIVE effects on average?

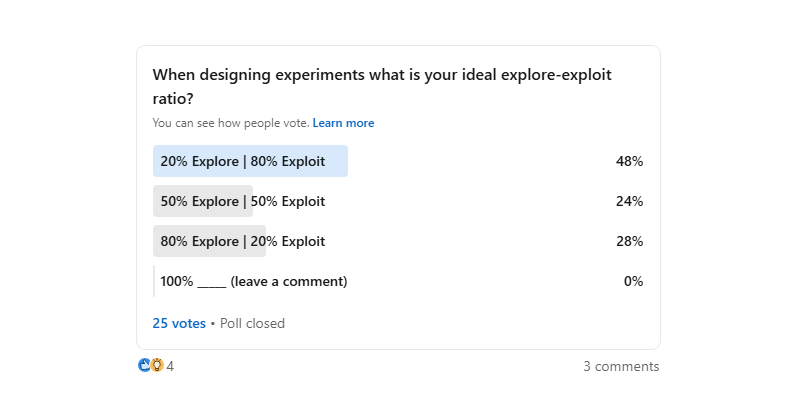

Poll 09: When designing experiments, what does your ideal EXPLORE / EXPLOIT ratio look like? Purely exploratory experiments are ones you have no previous quant/qual information about, touching randomness (if that's even possible). Exploit experiments are fueled by data, insights, past tests, knowledge. These may also include iterations and replications.

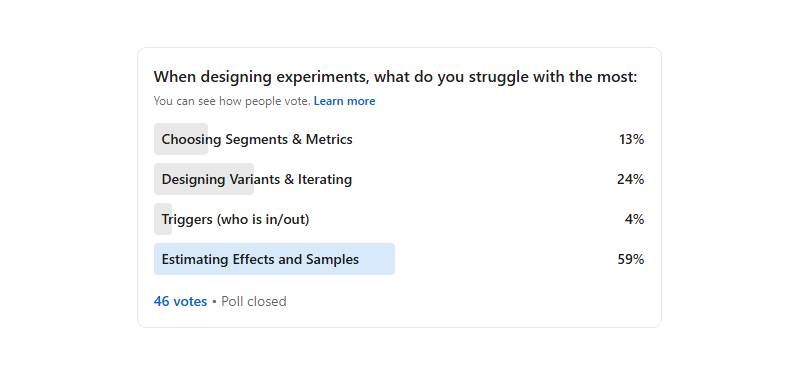

Poll 10: When designing experiments and a/b tests, what do you struggle with the most ...

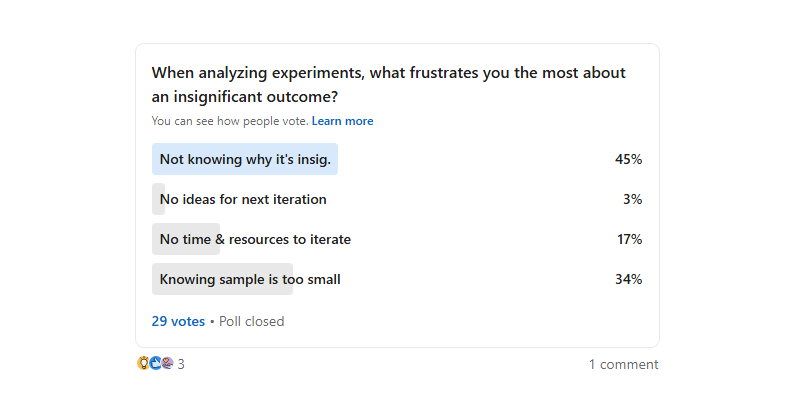

Poll 11: Given that a large portion of people in experimentation may be frustrated at times by an insignificant outcome (I feel the pain), what's the cause of this frustration?

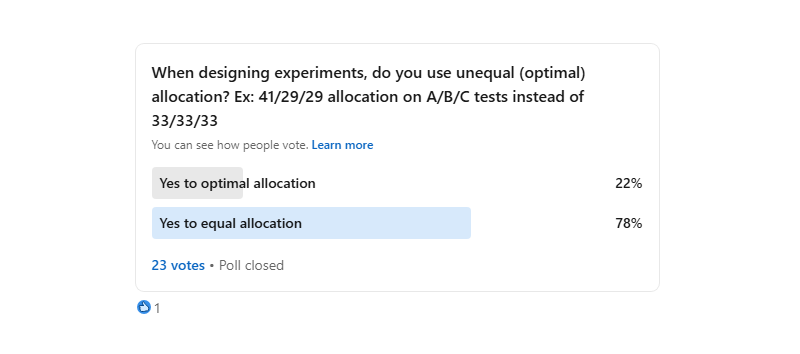

Poll 12:

Theoretically, designing experiments with unequal allocation of control/variation (where the number of variants is 2 or higher) provides us with more efficiency from reduced variance. ✅ The more variants there are using a shared (and optimal) control, the higher the benefit.

Valerii Babushkin and Craig Sexauer pointed me to this article which discusses this.

Practically however, it's not a free lunch as it comes with potential tradeoffs and challenges, as I was warned by Ron Kohavi who pointed me to section 7 in the "A/B Testing Intuition Busters" paper.

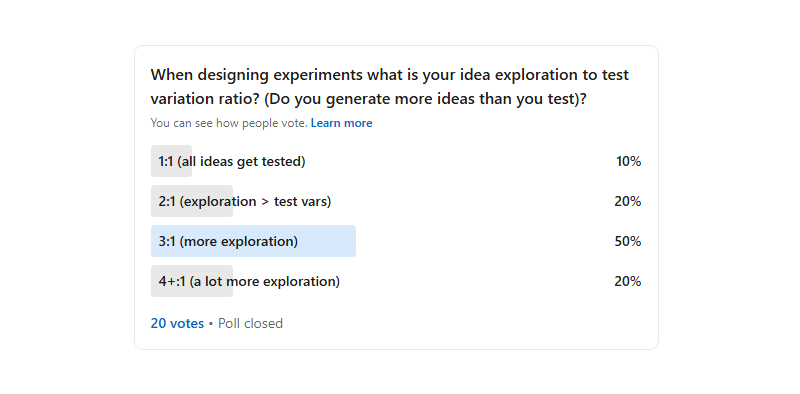
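As a rough illustration of the theoretical claim in this poll, here is a minimal sketch. It assumes k equal-sized variants sharing one control, equal outcome variance in every arm, and a fixed total sample. The function names and the 100,000 total are hypothetical. Under those assumptions, the variance of each variant-vs-control comparison is smallest when the control is about sqrt(k) times the size of each variant.

```python
import math


def optimal_control_share(k: int) -> float:
    """Share of total traffic for the shared control that minimizes the
    variance of each variant-vs-control comparison, given k variants."""
    # Minimizing 1/c + k/(1 - c) over c gives c = 1 / (1 + sqrt(k)).
    return 1 / (1 + math.sqrt(k))


def comparison_variance(k: int, control_share: float,
                        total_n: int = 100_000, sigma2: float = 1.0) -> float:
    """Variance of (variant mean - control mean) for one of k equal-sized variants."""
    n_control = total_n * control_share
    n_variant = total_n * (1 - control_share) / k
    return sigma2 / n_control + sigma2 / n_variant


for k in (1, 2, 4, 9):
    equal = comparison_variance(k, 1 / (k + 1))   # every arm gets the same share
    best_share = optimal_control_share(k)
    best = comparison_variance(k, best_share)
    print(f"k={k}: control share {best_share:.3f}, "
          f"variance reduced by {1 - best / equal:.1%}")
```

With a single variant the optimum is the usual 50/50 split, and the gain grows as more variants share the control. The sketch ignores the practical tradeoffs mentioned above.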

Poll 13: Do you generate & explore more ideas than you test (with some selection / discard process) or do you test all of your ideas?

Poll 14: Is it acceptable to pause & resume an active online experiment?

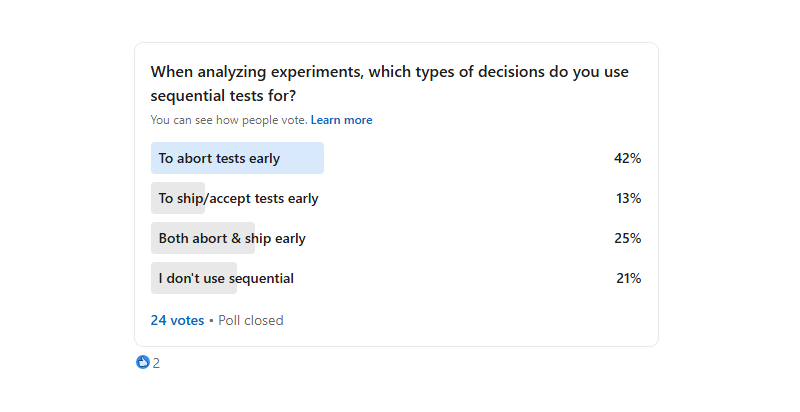

Poll 15: Some experimenters rely on sequential tests only to abort early (e.g., negative or undesirable results), while others use them for both abort and ship decisions (e.g., negative and positive results). Aside from the various flavors of sequential testing, what's your take on this?

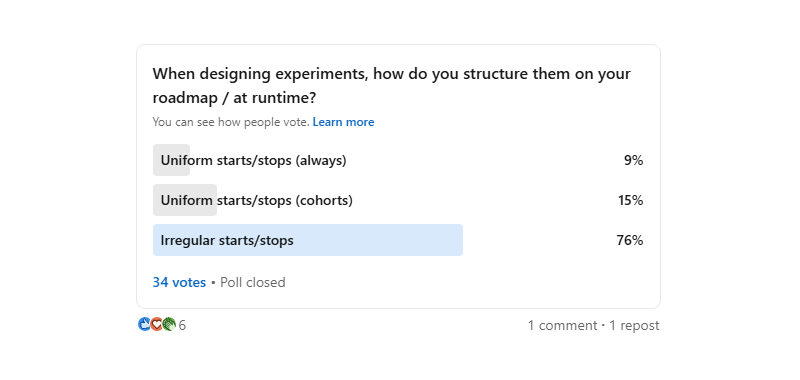

Poll 16:

When designing experiments, how do you structure them on your roadmap / runtime?

A) Uniform Starts/Stops + Always Fixed Duration

All experiments start/end at the same time; All experiments always have the same fixed duration of X.

B) Uniform Starts/Stops + Flexible Duration (Cohorts)

All experiments start/end at the same time; But experiments fall into various "cohorts" with duration being somewhat flexible between different "groups/cohorts".

C) Irregular Starts/Stops

All experiments have varied starts/stops and durations (most flexible).

Here is a visual of these three setups.

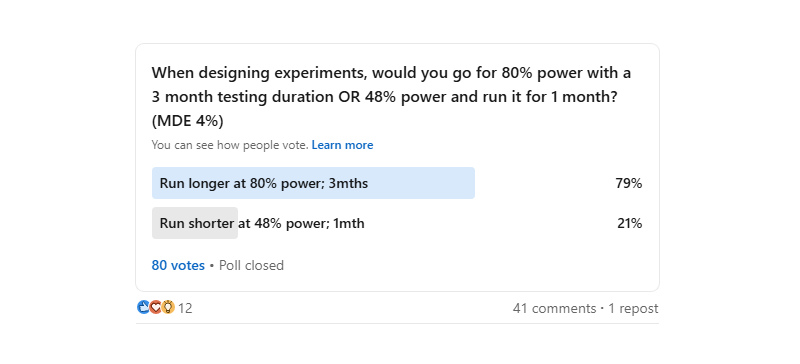

Poll 17:

When designing experiments, would you go for:

A) 80% power with a 3 month testing duration, OR

B) 48% power with a 1 month duration?

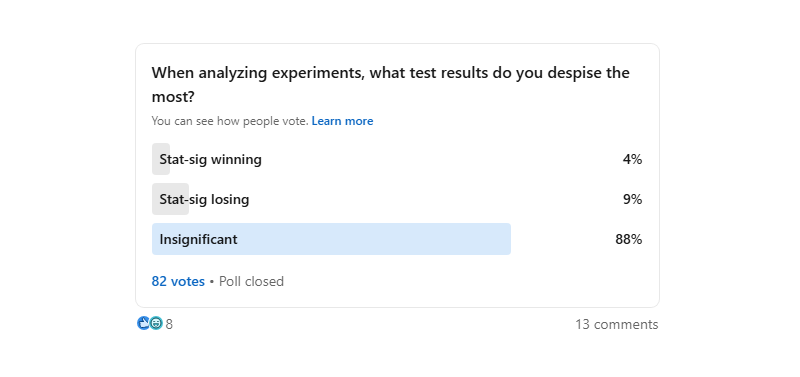
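To make the tradeoff in this poll concrete, here is a minimal sketch of how power drops when only part of the planned sample is collected. The poll does not state its significance level or how traffic accrues over time, so both are assumptions here: a z-test approximation, sample size proportional to duration, and an alpha you choose. The function name is hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def power_at_fraction(full_power: float, fraction: float,
                      alpha: float = 0.05, two_sided: bool = True) -> float:
    """Approximate power for the same true effect when only `fraction`
    of the originally planned sample is collected (z-test approximation)."""
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    # Standardized effect implied by the full design: z_crit + z_power.
    delta_full = z_crit + _STD_NORMAL.inv_cdf(full_power)
    # The standardized effect scales with the square root of the sample size.
    delta_short = delta_full * fraction ** 0.5
    # The negligible rejection mass in the opposite tail is ignored.
    return _STD_NORMAL.cdf(delta_short - z_crit)


# One month instead of three, assuming traffic accrues evenly over time.
print(round(power_at_fraction(0.80, 1 / 3, alpha=0.05, two_sided=True), 3))
print(round(power_at_fraction(0.80, 1 / 3, alpha=0.10, two_sided=False), 3))
```

Under these assumptions, a third of the sample gives roughly 0.37 power at a two-sided alpha of 0.05 and roughly 0.48 at a one-sided alpha of 0.10. The second figure is close to option B, though the poll does not say how its 48% was derived.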

Poll 18: Which test results do you despise the most?

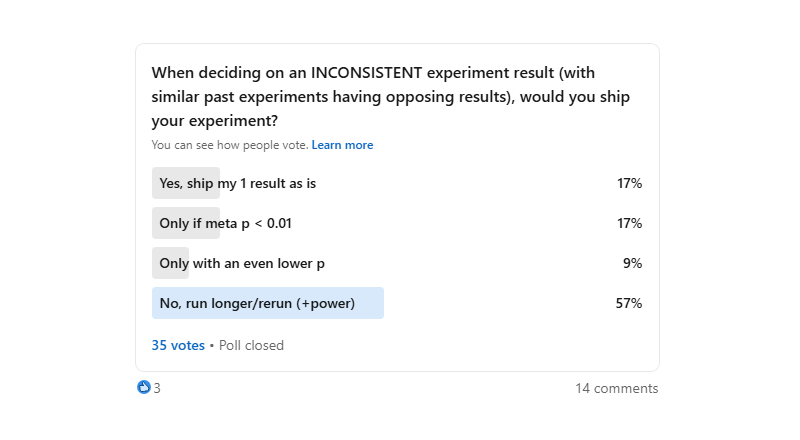

Poll 19:

A stat-sig result is a relatively easy ship decision, but ...

WHAT IF you are aware of other very similar experiments that have inconsistent / opposing and stat-sig results to what you just discovered in your experiment?

Your experiment ✅

Other past experiments: ⛔️⛔️⛔️⛔️

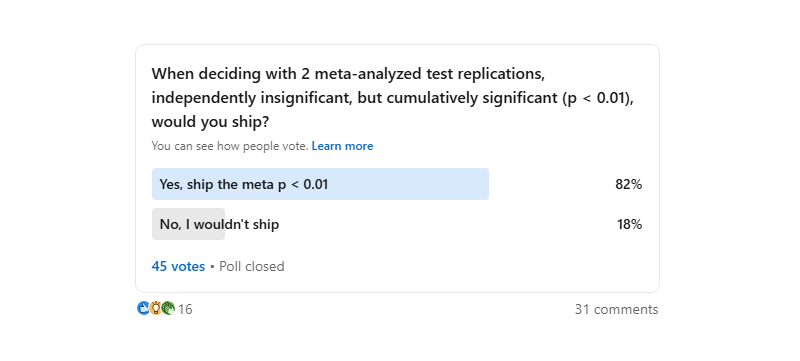

Poll 20:

Here is an interesting a/b test analysis scenario ...

Say you just finished running a replication because your first A/B test was insignificant and you believed in the change enough to re-run it. All nice, but the results turn out to be insignificant in both experiments:

TEST1 - p value of 0.07194

TEST2 - p value of 0.06195

Would you ship based on a meta-analysis (gaining a potential decision point sooner), or delay the decision into the future so that you have at least 1 (pure?) stat-sig test result to decide with?

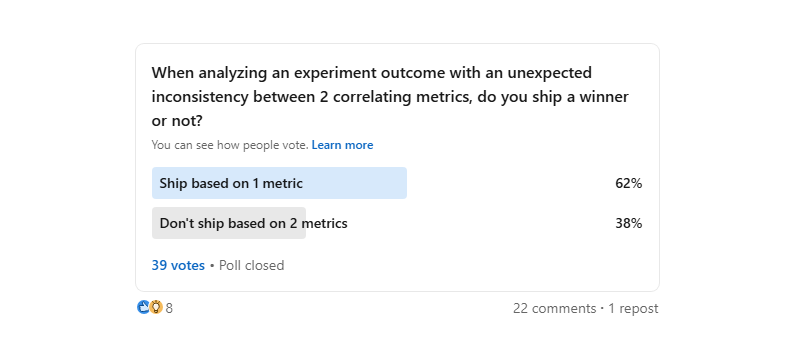
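For readers wondering what a meta-analysis of these two p-values could look like, here is a minimal sketch using two standard ways of combining p-values: Fisher's method and Stouffer's method. It assumes the two tests are independent and test the same hypothesis in the same direction. The Stouffer version also assumes the p-values are one-sided, which the poll does not state. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def fisher_combined_p(p_values):
    """Fisher's method: -2 * sum(ln p) follows a chi-square distribution
    with 2k degrees of freedom when all k null hypotheses are true."""
    k = len(p_values)
    half_stat = -sum(math.log(p) for p in p_values)  # chi-square statistic / 2
    # Closed-form chi-square survival function for even degrees of freedom.
    return math.exp(-half_stat) * sum(
        half_stat ** i / math.factorial(i) for i in range(k)
    )


def stouffer_combined_p(p_values):
    """Stouffer's method with equal weights, assuming one-sided p-values
    that all point in the same direction."""
    nd = NormalDist()
    z = sum(nd.inv_cdf(1 - p) for p in p_values) / math.sqrt(len(p_values))
    return 1 - nd.cdf(z)


p_values = [0.07194, 0.06195]  # TEST1 and TEST2 from the poll
print(f"Fisher:   {fisher_combined_p(p_values):.4f}")
print(f"Stouffer: {stouffer_combined_p(p_values):.4f}")
```

Under these assumptions, both combined p-values fall below 0.05 even though neither test does on its own. A fuller meta-analysis would pool effect sizes and their standard errors rather than p-values alone.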

Poll 21:

When you run, say an ecommerce A/B test and you detect an inconsistency between metrics that typically correlate, do you ship or not?

Example:

- SCOPE: test starts on a product detail page

- METRIC 1 (primary) is stat sig. positive to sales (transactions)

- METRIC 2 (secondary but typically correlating) is not stat sig. and negative to checkout visits (signal that is surprisingly inconsistent)

In other words, do you let secondary metrics increase or decrease your confidence in a result and affect your decisions?

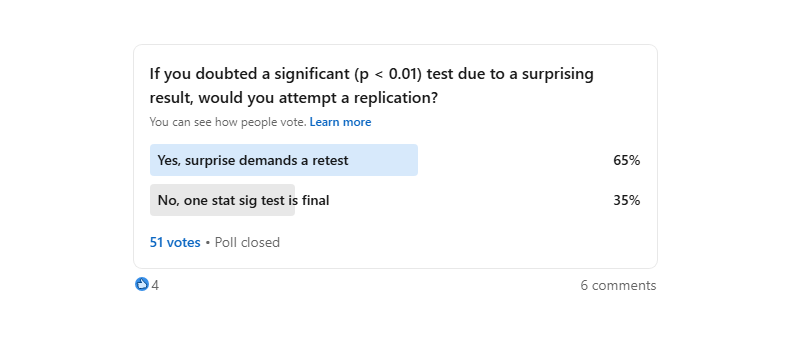

Poll 22: When analyzing a surprisingly statistically significant result (p < 0.01), what would you do?

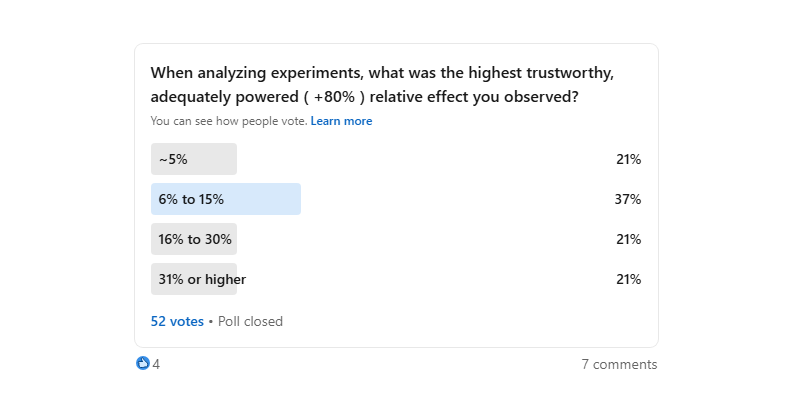

Poll 23: When analyzing online experiments, what was the highest trustworthy effect you observed?

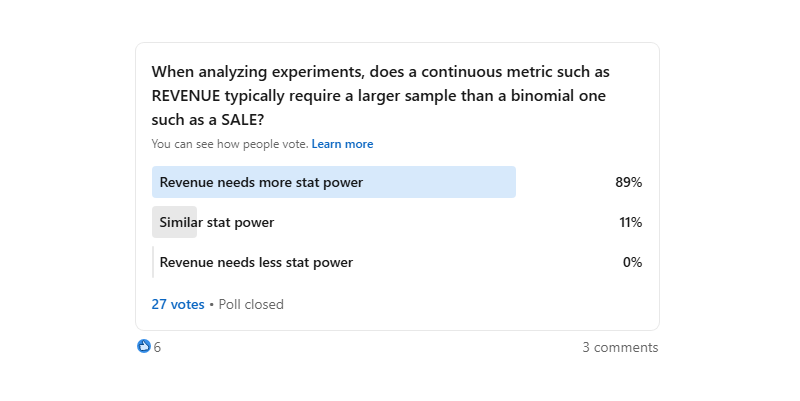

Poll 24: When you measure revenue, do you typically find that it needs a larger or smaller sample to achieve the same statistical power as a binomial metric (e.g., a transaction or sale)? I understand that the answer depends on the standard deviation of revenue, but what do you typically see?

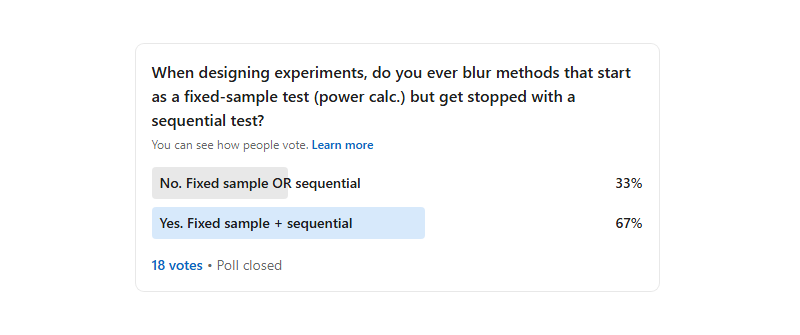
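One way to reason about this question is a minimal sketch under simplifying assumptions: revenue per user is modeled as a purchase indicator multiplied by an independent order value, both metrics target the same relative lift at the same alpha and power, and the required sample size is taken as proportional to the squared coefficient of variation of the metric. The function name and the example inputs are hypothetical.

```python
def relative_sample_size(conversion_rate: float, order_value_cv: float) -> float:
    """Ratio of users needed for revenue per user vs. conversion rate,
    for the same relative lift, alpha, and power.

    order_value_cv is the standard deviation of order value divided by its mean.
    """
    p = conversion_rate
    # Squared coefficient of variation of a Bernoulli(p) conversion metric.
    cv2_conversion = (1 - p) / p
    # Revenue per user = purchase indicator * order value (assumed independent).
    cv2_revenue = (order_value_cv ** 2 + (1 - p)) / p
    return cv2_revenue / cv2_conversion


# Example inputs (made up): 5% conversion, order-value std dev equal to its mean.
print(round(relative_sample_size(0.05, 1.0), 2))
```

The ratio simplifies to 1 + order_value_cv² / (1 - conversion_rate). In this simplified model, revenue needs a larger sample than the binomial metric whenever order values vary at all, and heavy-tailed order values push the ratio up quickly.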

Poll 25: When you design experiments, do you ever blur methods by adding some stopping agility to a fixed-sample experiment? Or do you stick to your guns till the end, as the experiment was designed?

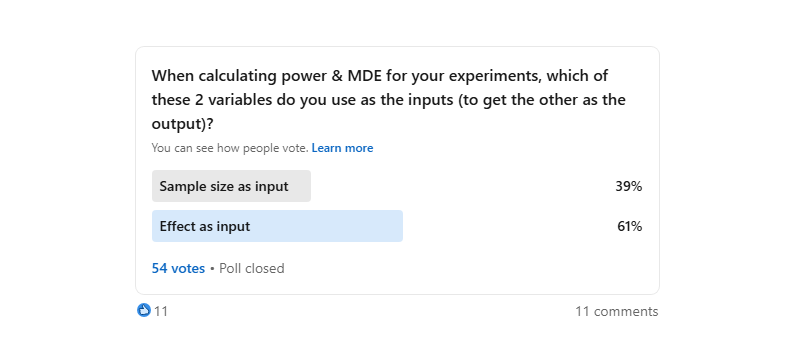

Poll 26: When designing experiments and calculating minimum detectable effects / power, what is your known input? Is it ... sample size or effect estimates first?

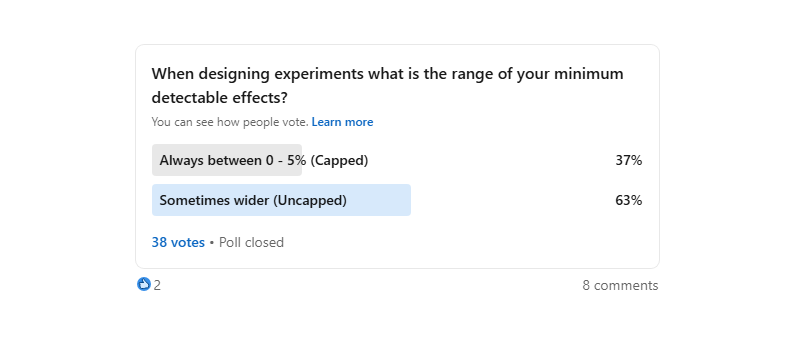

Poll 27: When you perform power calculations for your beautiful online experiments, do you use a capped range with an upper bound (ex: no more than 5%) or do you occasionally estimate your effects even higher beyond 5% (uncapped and more flexibly)?

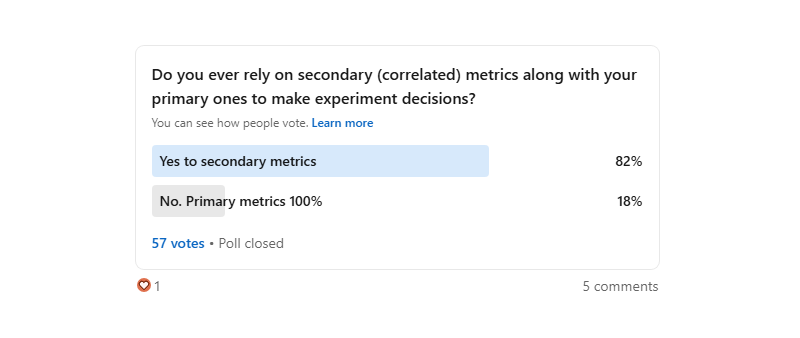

Poll 28: When analyzing experiments, do you let secondary and correlating metrics (such as adds-to-cart, funnel or progression metrics) influence your decision?

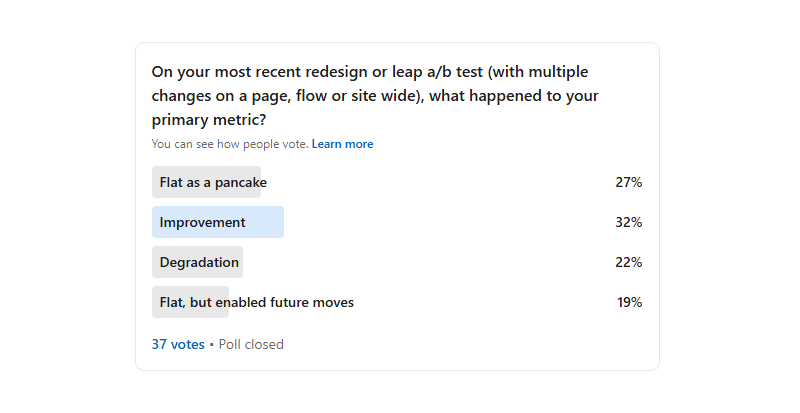

Poll 29: When looking back at experiments you ran, how did that last "redesign" a/b test go for you?

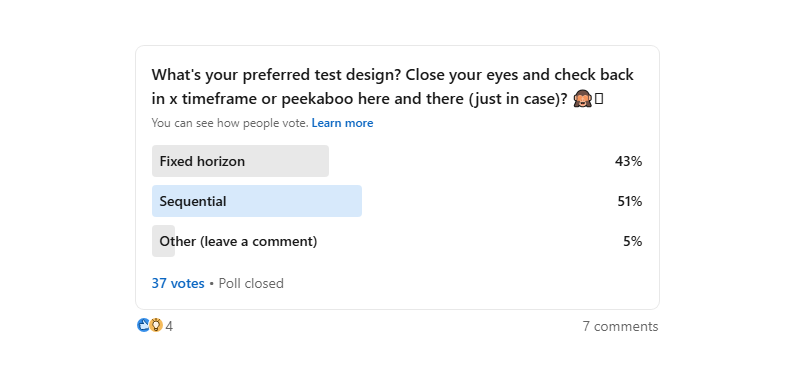

Poll 30: When designing experiments ...

Poll 31: When designing experiments ...

Poll 32: When designing experiments ...

Poll 33: When designing experiments ...

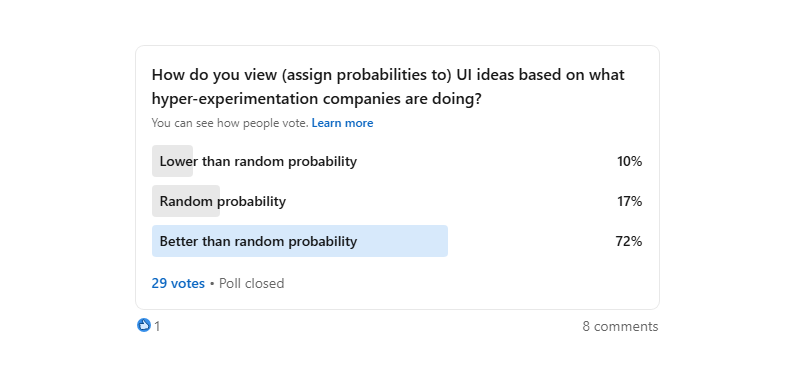

Poll 34: If you observe what experimentation leaders (Amazon, Netflix, Etsy, Bol, Airbnb, Microsoft, Walmart, Best Buy, etc.) are doing, what type of bets are you making on ideas based on their latest UI ideas and iterations? Below random, random, or above random probabilities?

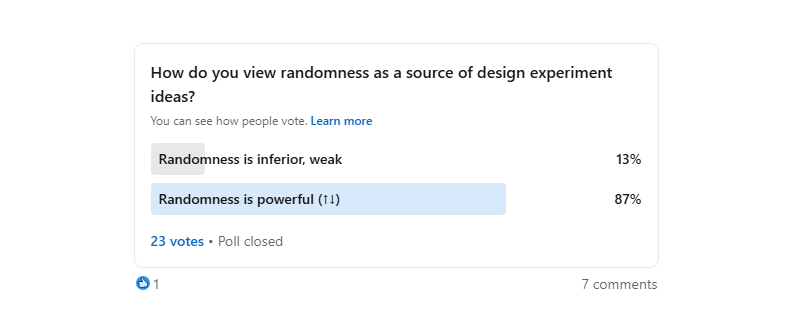

Poll 35: How do you view randomness in experimentation?

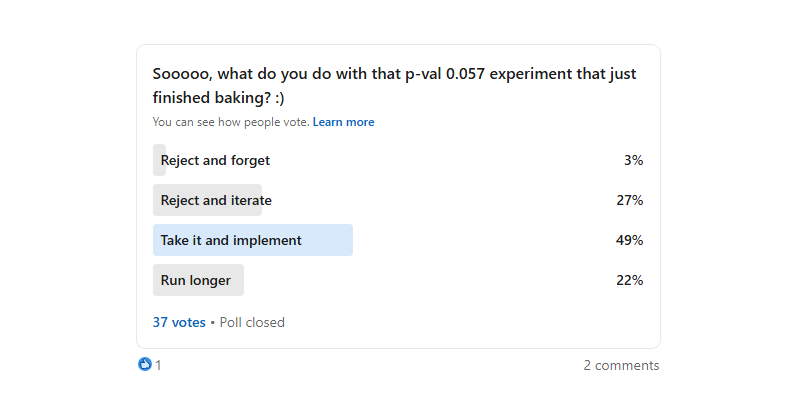

Poll 36: What do you do with your p-val 0.057 experiment?

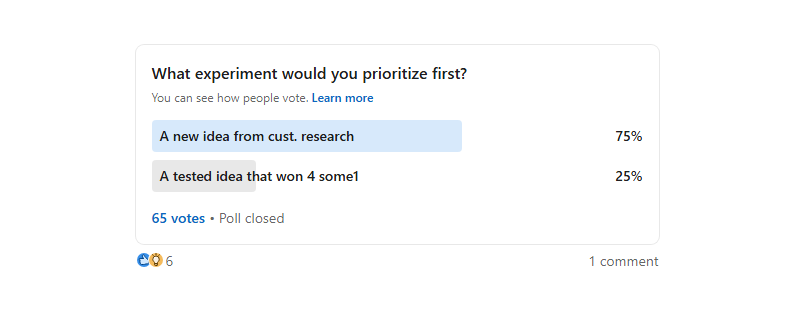

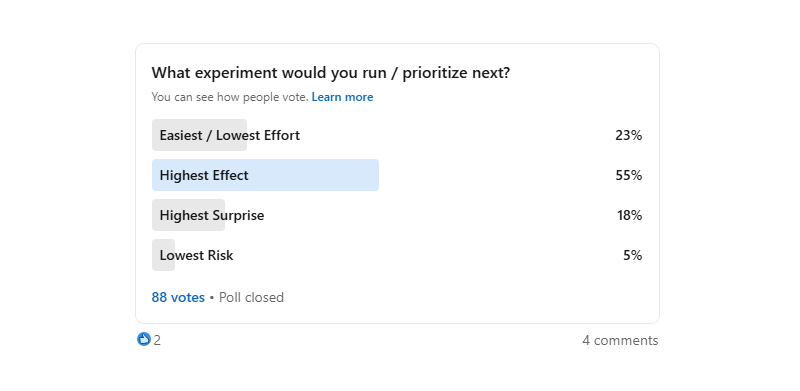

Poll 37: What experiment would you run next?

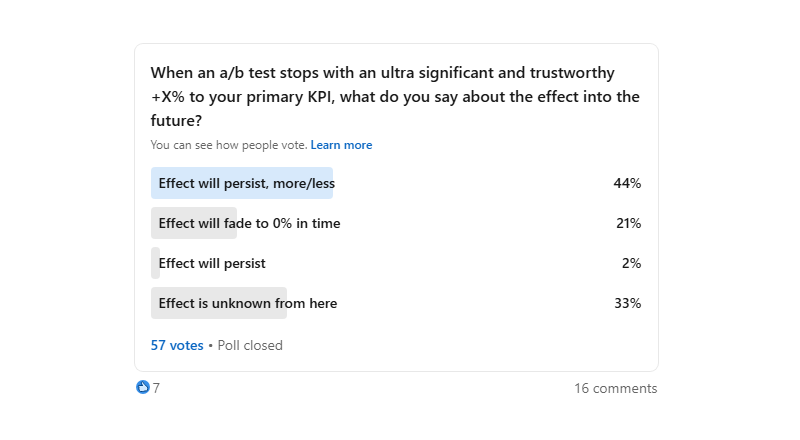

Poll 38: I'm curious what claims experimentation people make after rolling out or implementing an a/b test with some given effect. What do you say will happen to the effect in the future?

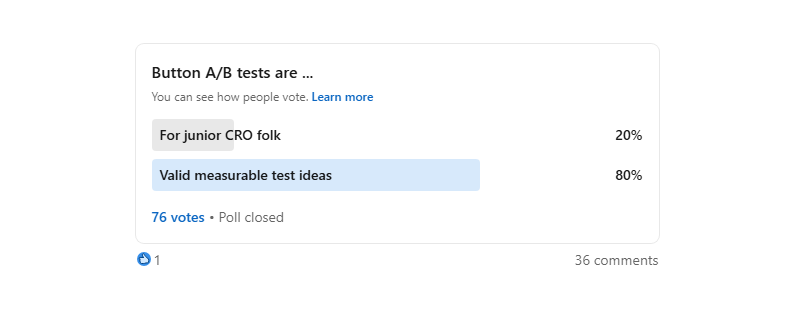

Poll 39: What's your take on those button tests?

Poll 40: So what's your take on the top part of the hierarchy of evidence?

Jakub Linowski on Dec 10, 2025
